Humanity at the Crossroads: Ethical Implications of AI in Medicine

The integration of artificial intelligence (AI) in healthcare has ushered in a new era of medical advancements, but not without raising significant ethical concerns. As AI systems become more prevalent in fields like radiology, emergency medicine, and telehealth, the challenge lies in addressing fundamental issues such as patient consent, data privacy, and implicit biases that these technologies often overlook.

Unraveling the Bias in Algorithms

AI’s promise in healthcare is undeniable, with its ability to uncover hidden disease patterns and predict illnesses. However, reliance on historical data can exacerbate existing biases, particularly those affecting marginalized communities such as LGBTQIA+ people and certain ethnic and racial groups. As the article highlights, addressing these biases during the initial implementation phase is crucial if AI is to improve outcomes beyond what traditional methods achieve.

Despite the importance of this issue, a study by du Toit et al. revealed that many peer-reviewed articles fail to address algorithmic bias adequately: of 63 articles on hypertension, none tackled the issue, underscoring the need for healthcare and AI professionals to develop stringent measures to identify and correct such biases.
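
One common starting point for such measures is a subgroup audit: compare how often a model catches true cases in each demographic group on a labeled validation set. The sketch below is a minimal illustration of that idea (comparing true positive rates, sometimes called an "equal opportunity" check), not the method used by du Toit et al.; the records, group labels, and the 0.1 tolerance are hypothetical.

```python
from collections import defaultdict


def true_positive_rates(records):
    """Return the true positive rate (sensitivity) for each group.

    Each record is a (group, actual, predicted) tuple; actual and predicted
    are booleans (True = condition present / flagged by the model).
    """
    positives = defaultdict(int)  # actual positive cases per group
    hits = defaultdict(int)       # actual positives the model flagged
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                hits[group] += 1
    return {g: hits[g] / positives[g] for g in positives}


def flag_disparity(rates, tolerance=0.1):
    """Return the gap between best- and worst-served groups and whether it exceeds tolerance."""
    gap = max(rates.values()) - min(rates.values())
    return gap, gap > tolerance


# Hypothetical validation records: (group, has_hypertension, model_flagged)
records = [
    ("group_a", True, True), ("group_a", True, True), ("group_a", True, False),
    ("group_a", False, False),
    ("group_b", True, True), ("group_b", True, False), ("group_b", True, False),
    ("group_b", False, True),
]

rates = true_positive_rates(records)
gap, needs_review = flag_disparity(rates)
print(rates, round(gap, 3), needs_review)
```

Here the hypothetical model catches two of three true cases in one group but only one of three in the other, so the audit flags the gap for review. A real audit would use several metrics and far larger samples.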

Empowering Patients Through Informed Consent

Patient autonomy remains a cornerstone of medical ethics, especially in the AI era. Older patients with multiple chronic conditions often express skepticism toward AI-based modalities. An ethical AI system must ensure that these patients are informed about the benefits and have the option to opt out. Transparency in this process fosters trust and addresses concerns about data security and privacy.

Responsible Deployment of AI Technologies

Regulatory bodies like the U.S. Food and Drug Administration (FDA) play a pivotal role in the ethical deployment of AI in healthcare. Establishing comprehensive guidelines and conducting frequent assessments are essential to maintaining transparency and accountability. The article emphasizes the need for a dynamic regulatory framework to prevent AI misuse and ensure patient welfare.

Liability Concerns and AI Hallucinations

Liability concerns present a complex challenge in the event of adverse outcomes. While physicians hold primary responsibility for AI technology selection, manufacturers must also ensure product safety and efficacy. The emergence of AI-specific liability insurance offers a novel solution to managing malpractice claims.

Moreover, healthcare providers must address AI hallucinations: AI-generated misinformation that is not based on real data. Recognizing the limitations of AI, including its inability to replace personalized care, is crucial.

Emotions and the Ethical Use of AI in Healthcare

AI’s impact on patient emotions is significant. The introduction of AI-powered diagnostic tools can lead to increased patient distress if not communicated empathetically. Ensuring equity and fairness in AI algorithms is imperative to prevent biases that affect emotional well-being.

Preserving the empathetic human connection in an AI-driven healthcare landscape is paramount. While AI can streamline tasks, it cannot take the place of human healthcare practitioners in addressing patients’ emotional needs.

As AI continues to transform healthcare, it is vital to prioritize ethical considerations. By actively engaging in ethical discussions, the healthcare industry can harness AI’s full potential to improve patient outcomes while upholding the values of medicine.

The Financial Impact of ESG Tools on Investment Portfolios: An In-depth Review

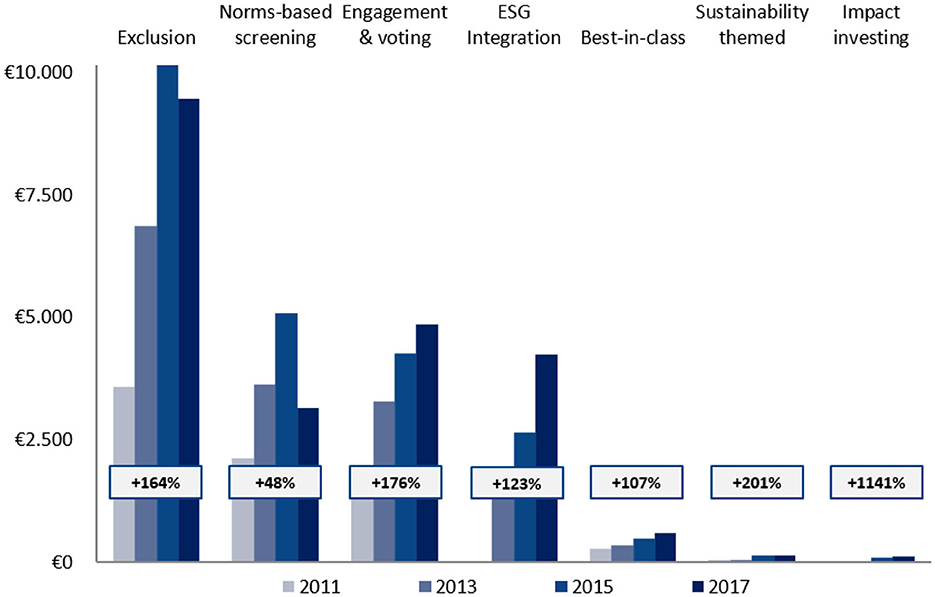

In a world where investment strategies are constantly evolving, the recent publication from Frontiers has shed light on the intersection of responsible investing and financial performance. This comprehensive review explores how Environmental, Social, and Governance (ESG) tools impact the return/risk profile of investment portfolios.

The paper, titled “Environmental, social, and governance tools and investment fund portfolio characteristics: a practical-question-oriented review,” delves into the practical implications of ESG factors on investment returns. It highlights the increasing use of ESG tools such as exclusion, best-in-class practices, and engagement, examining their effects across different asset classes and regions.

Notably, the review concludes that ESG factors generally contribute positively or neutrally to financial returns, suggesting that socially responsible investing is viable without compromising profitability. This finding is particularly relevant in the context of global initiatives like the Paris Climate Agreement, which has spurred a shift in investor preferences towards sustainability.

Key Insights from the Review

The review identifies several key trends and developments in ESG investment. There is a growing emphasis on sustainability legislation, with regions like Europe leading the way in developing laws and regulations surrounding ESG investing. Meanwhile, the proportion of sustainable investments relative to total assets under management has increased globally, with Europe the one exception.

Another notable trend is the exclusion of fossil fuels from investment portfolios, a strategy that aims to reduce CO2 footprints. The review cites research indicating that excluding fossil energy does not adversely affect portfolio performance, challenging conventional wisdom.

Impact of ESG Tools

The review examines three primary ESG tools: exclusion, best-in-class, and engagement. Exclusion, the most popular tool, can have mixed financial outcomes. Systematically excluding entire sectors may decrease portfolio returns, while excluding specific companies often has no negative impact and may even enhance returns.

The best-in-class approach, which selects companies leading in ESG within their sectors, generally has a positive or neutral effect on risk-adjusted returns. Meanwhile, successful engagement activities positively impact both intangible and financial returns, with the success of engagement largely dependent on the materiality of the engagement topic.
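
The difference between the screening tools is easiest to see in how each one selects holdings. The sketch below is a minimal illustration under stated assumptions: the company names, sectors, and 0-100 ESG scores are hypothetical, and it models only the selection step, not the return and risk effects the review measures. Engagement is omitted because it changes company behavior rather than portfolio membership.

```python
from dataclasses import dataclass


@dataclass
class Company:
    name: str
    sector: str
    esg_score: float  # hypothetical 0-100 scale, higher = better


def exclude(universe, excluded_sectors=(), excluded_names=()):
    """Exclusion screen: drop entire sectors and/or specific companies."""
    return [
        c for c in universe
        if c.sector not in excluded_sectors and c.name not in excluded_names
    ]


def best_in_class(universe, top_n=1):
    """Best-in-class screen: keep the top_n ESG scorers within each sector."""
    by_sector = {}
    for c in universe:
        by_sector.setdefault(c.sector, []).append(c)
    selected = []
    for members in by_sector.values():
        members.sort(key=lambda c: c.esg_score, reverse=True)
        selected.extend(members[:top_n])
    return selected


universe = [
    Company("OilCo", "energy", 35), Company("SolarCo", "energy", 80),
    Company("BankA", "financials", 60), Company("BankB", "financials", 72),
]

sector_excluded = exclude(universe, excluded_sectors={"energy"})
company_excluded = exclude(universe, excluded_names={"OilCo"})
leaders = best_in_class(universe)
print([c.name for c in sector_excluded])
print([c.name for c in company_excluded])
print([c.name for c in leaders])
```

Sector exclusion removes every energy holding, including the high-scoring one, which narrows diversification; company-level exclusion and best-in-class keep the sector represented. That contrast is consistent with the review's finding that sector-wide exclusion is the variant most likely to cost returns.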

Looking Ahead

As the ESG landscape continues to evolve, the review calls for further research on the financial effects of responsible investment, particularly in the areas of bonds and real estate. It also highlights the importance of understanding investor preferences and the role of ESG in mitigating risks and enhancing long-term returns.

For more detailed insights, you can read the full article on Frontiers.

Top Real Estate Investment Apps for 2025

In the ever-evolving world of real estate investment, the digital age has ushered in a new era of convenience and accessibility. According to a recent Business Insider article, the best apps for real estate investors in February 2025 offer a range of features designed to cater to both accredited and non-accredited investors. These apps are not just about low fees and dividend payouts; they also provide access to pre-vetted properties and property managers.

Among the top contenders is RealtyMogul, hailed as the best overall real estate investing app. This platform offers a seamless experience for investors by providing pre-vetted public, non-traded REITs, making it accessible to all investors regardless of their accreditation status. For a more detailed review, you can visit the RealtyMogul review.

For those who are new to the investment scene or do not meet the criteria for accreditation, Fundrise emerges as a top choice. With a low entry point of just $10, Fundrise offers a variety of investment options, including electronic real estate funds and IPOs. More insights can be found in the Fundrise review.

Investors looking to diversify into alternative asset classes might find Yieldstreet appealing. This platform provides access to unique investments such as legal finance and art, alongside traditional real estate options. However, it is primarily geared towards accredited investors. To learn more, check out the Yieldstreet review.

For short-term real estate investments, Groundfloor offers a compelling proposition. This platform is suitable for both accredited and non-accredited investors, with investment terms ranging from 30 days to 36 months. The platform reports a historical annual return of 10%. For further reading, visit the Groundfloor review.

Finally, for those who meet the accreditation requirements and are willing to invest at least $5,000, EquityMultiple offers a wide array of investment options in commercial real estate. This platform is ideal for investors looking to explore various property types. More details can be found in the EquityMultiple review.

In summary, the landscape of real estate investing has been significantly transformed by these innovative apps. Whether you’re a seasoned investor or just starting, these platforms offer a variety of options to suit different investment goals and risk appetites. For more comprehensive insights, you can explore the original Business Insider article.

Revolutionizing Real Estate: Top 6 PropTech Trends Shaping 2025 to 2028

First up, eSigning is becoming the norm. The pandemic accelerated the adoption of digital signatures, and the global market is projected to grow at an impressive 41.2% annually from 2024 through 2033. Companies like HelloSign, acquired by Dropbox, and DocuSign, which entered the digital notary space by acquiring LiveOak Technologies, are leading the charge. This shift not only offers flexibility and security but also paves the way for smart contracts on the blockchain, which Deloitte calls “the next big thing in commercial real estate.”

Next, the real estate industry is tapping into proprietary advertising solutions. Platforms like Audience Town and Nextdoor are providing custom solutions to enhance real estate advertising. Audience Town recently secured $2.1 million to expand its platform, while Nextdoor’s hyper-localized campaigns continue to grow, with an IPO on the horizon.

Rental property management and automation are also taking off. Companies like Knock CRM and ManageCasa are automating property management tasks, increasing efficiency for property owners. Knock CRM raised $20 million to expand its SaaS platform, while ManageCasa partnered with Stripe to automate rent payments and property expenses.

Interest in fractional real estate investments is rising, fueled by the success of retail investing platforms. Proptech companies like Republic, Fundrise, and Groundfloor offer low barriers to entry, making real estate investment more accessible to the masses. The global crowdfunding real estate market is expected to skyrocket from $13 billion in 2018 to nearly $870 billion by 2027.
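
To put that projection in perspective, the compound annual growth rate implied by the two quoted figures can be computed directly. This is plain arithmetic on the numbers above, not an independent forecast.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by growing from start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text: $13 billion in 2018 to roughly $870 billion in 2027 (9 years).
rate = cagr(13e9, 870e9, 2027 - 2018)
print(f"Implied annual growth: {rate:.1%}")
```

The result works out to roughly 59 to 60% growth per year, sustained for nine years.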

Smart homes are becoming the norm, especially among Gen-Z renters who prioritize smart-home tech over traditional amenities. Companies like Ecobee and SmartRent are leading the charge, with SmartRent raising $60 million to expand its offerings. The household penetration of smart home devices is expected to grow from 52.4% to 75.1% by 2028.

Finally, the rise of iBuyers is reshaping the real estate landscape. Companies like Opendoor, which recently went public via a SPAC IPO, offer quick sales and convenience, appealing to a growing number of sellers. While iBuying currently holds about 1% of the total residential real estate market, it is poised for significant growth in the coming years.

As we look to the future, these proptech trends promise to disrupt the real estate industry, driven by the rapid adoption of digital and automated solutions. For more insights, check out the full article on Exploding Topics.

Diverse Business Opportunities Await Entrepreneurs in 2025

Exploring Lucrative Business Ideas for 2025

In a world where entrepreneurship is continually evolving, the quest for the next big business idea is an ongoing journey. A recent article from Business News Daily sheds light on 26 promising business ideas to ignite the entrepreneurial spirit in 2025. Let’s delve into some of these innovative concepts that cater to diverse skills and interests, offering avenues to turn passions into profitable ventures.

Embrace the Outdoors with a Lawn Care Service

If the outdoors is your sanctuary, a lawn care service might be your calling. With minimal equipment and a love for landscaping, this venture can transform tedious tasks into a rewarding experience. As the article notes, this business can evolve into a full-fledged landscaping company by offering premium services and building a reputable brand.

Drive into the Future with Rideshare Driving

For those hesitant to dive headfirst into entrepreneurship, rideshare driving offers a flexible entry point. Leveraging platforms like Uber and Lyft, aspiring business owners can enjoy the independence of a small business without the logistical burdens. This idea is perfect for individuals seeking a side hustle with minimal commitment.

Pet Sitting: A Pawsitively Rewarding Opportunity

With approximately 70% of U.S. families owning pets, a pet-sitting business presents a lucrative opportunity. This venture allows you to provide peace of mind to pet owners while enjoying the company of furry friends. As highlighted in the article, pet sitting can be seamlessly integrated with other online income streams, making it an ideal choice for multitaskers.

Unleash Creativity with T-shirt Printing

For those with a flair for fashion or humor, launching a T-shirt printing business can be a creative outlet. With the right setup, entrepreneurs can print custom designs or licensed artwork on T-shirts, catering to a global audience through e-commerce platforms.

Clean Up with a Cleaning Service

A cleaning service offers a straightforward path to entrepreneurship with relatively low overhead. By providing additional services like floor waxing or power-washing, new businesses can stand out in a competitive market. The article emphasizes the importance of planning, dedication, and marketing to attract a loyal customer base.

Online Reselling: A Thrifty Business Model

For those with an eye for fashion and sales, online reselling offers a chance to turn thrift finds into profit. Platforms like Poshmark and Mercari provide a starting point for this venture, allowing entrepreneurs to expand into their own resale websites over time.

Teaching from Home: The Rise of Online Education

The demand for online education continues to grow, providing opportunities for entrepreneurs to teach subjects they are passionate about. Whether it’s academic subjects or teaching English as a foreign language, this business model offers flexibility and a global reach.

Conclusion

The article from Business News Daily highlights the diverse range of small business ideas available for aspiring entrepreneurs in 2025. Whether you’re drawn to the digital realm or prefer hands-on ventures, there’s a path to success for everyone. As the business landscape continues to evolve, these ideas present exciting opportunities to turn passions into profitable enterprises.

Navigating the Future: Challenges and Opportunities for the Commercial Real Estate Sector

In a rapidly evolving landscape, the commercial real estate sector is poised at a crossroads, presenting both challenges and opportunities for stakeholders. As highlighted in the 2025 Commercial Real Estate Outlook by Deloitte, organizations are urged to strategically navigate the shifting tides to secure a robust position for the future.

Global Economic Forecasts

The economic forecasts for 2024 paint a varied picture across different regions. In the United States, the economic outlook is cautiously optimistic, with potential growth tempered by inflationary pressures. Meanwhile, the Eurozone and Asia-Pacific regions are navigating their own unique challenges and opportunities, as detailed in reports from Deloitte Insights.

Interest Rate Policies

Interest rate policies set by major central banks such as the Bank of England and the Federal Reserve are pivotal in shaping the economic landscape. These policies have far-reaching implications for the real estate sector, affecting everything from loan maturities to investment strategies.

Technological Advancements and Climate Policies

The rise of artificial intelligence and growing demand for computing capacity are driving unprecedented growth in global data centers. This technological boom is coupled with a growing emphasis on decarbonization efforts, which are reshaping the real estate landscape. The sector is increasingly being influenced by climate change and regulatory adaptations, as noted in the TIME article on climate change’s impact on real estate.

Generational Workforce Shifts

The real estate industry is also experiencing a generational shift in workforce dynamics. As detailed by Deloitte, a new generation of workers is stepping up, bringing fresh perspectives and challenges to the fore. This shift is critical for the industry’s evolution and adaptation to modern demands.

In conclusion, the 2025 Commercial Real Estate Outlook underscores the importance of strategic positioning in the face of evolving global trends. By embracing innovation and sustainability, real estate organizations can not only overcome current challenges but also thrive in the future.

DeepSeek Overtakes ChatGPT as Top AI Contender

In a remarkable turn of events, the artificial intelligence (AI) landscape has been shaken by the unexpected rise of Chinese startup DeepSeek. Launched in January, DeepSeek’s free AI assistant has quickly climbed the ranks, overtaking OpenAI’s ChatGPT as the top app on Apple’s App Store. This swift ascent has sparked concerns about OpenAI’s dominance in the AI sector.

The timing of DeepSeek’s rise coincides with a major announcement from President Trump, who revealed a joint venture worth $500 billion involving OpenAI, SoftBank, Oracle, and MGX. This initiative aims to bolster U.S. AI infrastructure and maintain American leadership in the sector. As 2025 unfolds, the AI race is set to become even more competitive.

Best-Value AI Stocks

Value investing focuses on identifying stocks trading below their intrinsic worth. Investors often use the price-to-earnings (P/E) ratio to find undervalued stocks. However, it is crucial to consider the reasons behind a stock’s discounted price and whether the gap with peers is likely to close.

- Yiren Digital Ltd.: A fintech company from China, Yiren Digital connects investors with borrowers and offers various financial services. The company is enhancing its AI capabilities and recently joined the China Artificial Intelligence Industry Alliance.

- i3 Verticals, Inc.: Specializing in software solutions for public sectors and healthcare, i3 Verticals leverages AI to boost customer engagement.

- Perion Network Ltd.: Based in Israel, Perion is a global digital advertising company using AI to optimize ad campaigns through its proprietary solutions, SORT and WAVE.
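
The P/E screen described above reduces to simple arithmetic: divide the share price by earnings per share, then compare the result with peers. The sketch below is a minimal illustration with hypothetical numbers; it does not use data for any of the companies listed, and, as the text cautions, a low multiple alone does not establish that a stock is undervalued.

```python
from statistics import median


def pe_ratio(price, eps):
    """Price-to-earnings ratio; None when earnings are zero or negative (P/E not meaningful)."""
    if eps <= 0:
        return None
    return price / eps


def discount_to_peers(price, eps, peer_pes):
    """Fractional discount of a stock's P/E relative to the median peer P/E.

    A positive result means the stock trades at a lower multiple than its peers.
    """
    pe = pe_ratio(price, eps)
    if pe is None:
        return None
    return 1 - pe / median(peer_pes)


# Hypothetical figures: $20 share price, $2.50 trailing EPS, peers at 14x, 16x and 20x.
print(pe_ratio(20.0, 2.50))                          # 8.0
print(discount_to_peers(20.0, 2.50, [14, 16, 20]))   # 0.5
```

Using the peer median rather than the mean keeps one richly valued competitor from exaggerating the apparent discount.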

Fastest-Growing AI Stocks

Growth investors seek companies with increasing revenue and earnings per share (EPS), signaling strong business fundamentals. However, relying solely on these metrics can be misleading, so a balanced assessment is necessary.

- Sportradar Group AG: A global sports technology company providing data analytics and AI-driven solutions for sports organizations and media outlets.

- Duolingo, Inc.: The leading mobile learning platform, Duolingo is integrating AI-powered innovations to enhance language learning experiences.

- ODDITY Tech Ltd: A consumer tech company leveraging AI and data science to create digital-first beauty and wellness brands.

AI Stocks With the Most Momentum

Momentum investing involves capitalizing on existing market trends by investing in stocks that have recently outperformed. While AI momentum stocks can offer high returns, investors must examine each company’s financials to judge whether its growth prospects are sustainable.

- Quantum Computing, Inc.: Focused on developing affordable quantum computing solutions, the company has secured partnerships with NASA.

- SoundHound AI, Inc.: Known for its voice recognition technology, SoundHound recently partnered with Rekor Systems to enhance police and emergency vehicles with voice-controlled AI.

- Palantir Technologies, Inc.: Providing data integration and analytics platforms, Palantir recently extended its partnership with the U.S. Army to enhance AI-driven data solutions.

Advantages and Disadvantages of AI Stocks

Advantages

- Mass Disruption: AI’s rapid evolution and widespread applications across industries provide significant growth opportunities.

- Innovation: AI-driven automation enhances efficiency and reduces costs, securing long-term competitive advantages for leading companies.

- Investor Enthusiasm: AI stocks often experience rapid price appreciation due to strong investor sentiment.

Disadvantages

- High Valuations and Market Speculation: Many AI stocks trade at high valuations, posing risks of price corrections.

- Regulatory Risks: Increasing scrutiny from governments may lead to stricter regulations impacting growth prospects.

- Stiff Competition: The AI industry is highly competitive, with major players and emerging startups constantly advancing their technologies.

In conclusion, while AI stocks offer substantial growth potential, investors must carefully navigate high valuations, regulatory uncertainties, and intense competition. Thorough scrutiny of a company’s financials and risk management is essential to avoid speculative bubbles and hype. For more insights, refer to the original article on Investopedia.

California’s Commercial Leasing Landscape Set for Transformation

In a significant legislative shift, California is poised to introduce new protections for commercial tenants starting January 1, 2025. The Commercial Tenant Protection Act, enacted as SB 1103, extends a suite of protections to “Qualified Commercial Tenants” (QCTs) that were traditionally reserved for residential tenants.

Under this new law, QCTs are defined as small enterprises including sole proprietorships, partnerships, limited liability companies, or corporations with five or fewer employees, as well as restaurants with fewer than 10 employees and nonprofits with fewer than 20 employees. These entities often struggle with access to capital, making the protections especially critical.

The legislation mandates several key changes:

- Notice for Rent Increases: Property owners must give QCTs at least 30 days’ notice of a rent increase totaling 10% or less over the preceding 12 months, and at least 90 days’ notice of an increase exceeding 10%.

- Automatic Renewal of Tenancies: Month-to-month tenancies will automatically renew unless a termination notice is given 60 days in advance for tenancies longer than a year, or 30 days for shorter durations.

- Language Translation: Lease agreements negotiated in languages such as Spanish, Chinese, Tagalog, Vietnamese, or Korean must be translated and provided to the tenant.

- Building Operating Costs: These must be proportionately allocated among tenants, with detailed documentation provided. Violations may allow tenants to claim damages.
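
The two notice rules above can be expressed as simple lookups. This is a simplified sketch of the rules exactly as summarized here; the function names are hypothetical, and it ignores the statute's other conditions (such as how a tenant qualifies as a QCT), so it is no substitute for the legal review the article recommends.

```python
def rent_increase_notice_days(increase_pct_past_12_months):
    """Minimum notice, in days, for a rent increase to a QCT.

    increase_pct_past_12_months: total increase over the preceding 12 months, in percent.
    Increases of 10% or less need 30 days; anything above 10% needs 90 days.
    """
    return 30 if increase_pct_past_12_months <= 10 else 90


def termination_notice_days(months_of_tenancy):
    """Minimum notice, in days, to end a month-to-month QCT tenancy.

    Tenancies longer than one year need 60 days; shorter tenancies need 30 days.
    """
    return 60 if months_of_tenancy > 12 else 30


print(rent_increase_notice_days(8))    # 30
print(rent_increase_notice_days(12))   # 90
print(termination_notice_days(18))     # 60
print(termination_notice_days(6))      # 30
```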

This move, as reported by Holland & Knight, signals a broader trend towards legislative efforts to protect small business operators in commercial settings. For those interested in the full details of how these changes will impact commercial property management, consulting with legal experts from Holland & Knight’s West Coast Real Estate Practice Group is advised.

Flexible Office Sector Booms Amid Hybrid Work Evolution

The flexible office sector is on an upward trajectory, continuing to expand as hybrid work models evolve. According to a recent report from CoworkingCafe, the coworking inventory grew by an impressive 13% in square footage year-over-year as of the third quarter of 2024. This surge is further underscored by a 22% increase in the total number of coworking spaces during the same period.

Suburban and tertiary markets are particularly experiencing significant growth in coworking spaces. Hybrid employees, seeking to avoid lengthy commutes, are increasingly opting to work closer to home. Notably, New Jersey, one of New York’s major suburban markets, saw its coworking space inventory swell by 36% year-over-year, reflecting the robust demand in these regions.

This trend is highlighted in the original article published by GlobeSt, which outlines the continued expansion of flexible office spaces. The article emphasizes how changing work preferences and technological advancements are driving this growth, with expectations for the trend to persist through 2025.

Key Trends and Observations

- The flexible office sector is expanding due to evolving work dynamics.

- Suburban markets are witnessing a rise in coworking spaces as employees seek proximity to home.

- Technological advancements are playing a crucial role in supporting this growth.

As we move forward, the flexible office sector is poised for continued growth, adapting to the needs of a changing workforce. For more detailed insights, visit the full article on GlobeSt.

Navigating North Jersey’s 2025 Real Estate Market: A Forecast for Steady Growth

In the ever-evolving landscape of North Jersey’s real estate market, 2025 promises to be a year of continued growth, albeit at a more measured pace. According to insights from a recent Bergen Record article, home values in New Jersey have been on a consistent upward trajectory. However, the rate of increase is expected to slow, with projections indicating a rise of just 2% to 4% in 2025.

Interest rates are another crucial factor influencing the market. The Federal Reserve’s decision to implement two interest rate cuts in 2024 has brought mortgage rates down from their peak of 8% to a more manageable range of 6% to 7%. This shift is likely to benefit both current homeowners looking to relocate and first-time buyers.
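
To see why the drop from 8% matters to buyers, the standard fixed-rate amortization formula can be applied to both rates. The sketch below assumes a hypothetical $400,000, 30-year loan and uses 6.5% as a stand-in for the 6% to 7% range; actual payments also include taxes, insurance, and fees not modeled here.

```python
def monthly_payment(principal, annual_rate, years=30):
    """Fixed-rate mortgage payment (principal and interest) via the standard amortization formula."""
    r = annual_rate / 12   # monthly interest rate
    n = years * 12         # number of monthly payments
    return principal * r * (1 + r) ** n / ((1 + r) ** n - 1)


# Hypothetical $400,000 30-year loan at the 8% peak vs. 6.5% (middle of the 6-7% range).
loan = 400_000
at_peak = monthly_payment(loan, 0.08)
now = monthly_payment(loan, 0.065)
print(f"8.0%: ${at_peak:,.2f}/month, 6.5%: ${now:,.2f}/month, saving ${at_peak - now:,.2f}")
```

On these assumptions, the lower rate trims roughly $400 from the monthly principal-and-interest payment.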

Despite these positive trends, homeowners should brace for a potential rise in home insurance premiums. Factors such as increased property values, inflation, and the growing frequency of natural disasters are expected to drive premiums up. A survey by Fitch Ratings reveals that 56% of experts anticipate insurance price hikes in 2025.

The demographic profile of homebuyers is also shifting. The median age for first-time homebuyers reached a record high of 38 years in 2024, according to the National Association of Realtors. This trend is expected to persist as buyers take longer to save for their purchases amid rising home values.

Meanwhile, the rental market in the greater New York metropolitan area is poised for modest rent increases in 2025. Although there has been a nationwide decline in rent prices, the demand for rentals in this region continues to outstrip supply, particularly as more people move from cities to suburban areas seeking more space.

For those interested in further details, the original article from the Bergen Record provides a comprehensive overview of these trends and more. As the North Jersey real estate market continues to evolve, staying informed will be key for both buyers and sellers navigating this dynamic environment.

How Hybrid Work Models Shape the Future of Commercial Real Estate

The Remote Work Revolution

As the pandemic unfolded, office occupancy in major US markets plummeted by a staggering 90% from late February to March 2020. Although there was a partial recovery by the end of 2023, occupancy rates remain at approximately half of their pre-pandemic levels. The ongoing uncertainty surrounding remote work continues to dampen office occupancy, lease revenue, and renewal rates in the commercial real estate sector.

Hybrid Work: A Glimmer of Hope

Despite these challenges, the hybrid work model offers a glimmer of hope. Researchers found that companies expecting employees in the office only one day per week saw a 41% drop in office space demand from 2019 to 2023. In contrast, demand fell by just 9% for those with staff onsite two to three days per week and even grew by 1% for companies with a four to five-day office presence.

Economic Implications

The economic ramifications of declining office values are significant. New York, for instance, experienced the largest dollar decline in office space value, with a $90 billion drop from December 2019 to December 2023. Looking ahead, projections suggest that New York’s office values could remain 47% to 67% below 2019 levels, depending on future workplace norms.

Adapting to Change

The research underscores the importance of adaptation. A “flight to quality” has seen newer office buildings with more amenities fare better, as companies strive to enhance office quality to entice workers back. Moreover, the conversion of vacant office spaces into multifamily residential units is proposed as a viable solution to address vacancies. Programs like New York’s Office Conversion Accelerator are already working toward this goal.

Fiscal Challenges for Urban Areas

The decline in office values has far-reaching implications for urban areas, where taxes from commercial real estate contribute about 10% of overall tax revenue. As tax revenue declines, cities may face tough choices: raising other tax rates or cutting government spending on services, potentially making them less attractive places to live.

This insightful analysis from the University of Chicago Booth School of Business serves as a clarion call for stakeholders in the commercial real estate sector to navigate these changes strategically and creatively.

MetaWealth: Transforming Real Estate Investment with Blockchain

In the fast-paced world of tech funding, where attention often shifts from one buzzword to another, blockchain technology continues to quietly revolutionize industries, despite the current spotlight on AI. A prime example of this evolution is MetaWealth, a startup that is transforming real estate investment through blockchain technology.

In a recent episode of “TechTalk with TFN,” Jack Land, Head of Marketing and UK Growth for MetaWealth, shared insights on how the company is democratizing real estate investment. With their platform, individuals can invest in real estate with as little as $100, utilizing blockchain to tokenize these investments. This not only simplifies the investment process but also makes it accessible to a broader audience.

Revolutionizing Real Estate Investment

“Real estate is a cornerstone of a well-rounded portfolio,” Land explained. “But accessing worthwhile deals is incredibly difficult.” MetaWealth aims to change this by allowing investments to be completed in less than ten minutes, regardless of the investor’s location. This approach is about accessibility and inclusivity, ensuring that property investment is no longer exclusive to high-net-worth individuals.

Founded by Amr Adawi, Darren Carvalho, and Michael Topolinski, MetaWealth is experiencing rapid growth. The company is currently raising funds to further expand its platform, which already serves investors from approximately 25 different countries. Land emphasized their commitment to tackling wealth disparity and promoting diversity by offering educational resources to help investors understand their investments.

Blockchain’s Emerging Role

Despite the challenges facing the Web3 sector, blockchain’s adoption by major companies like Visa and PayPal signals a growing confidence in its potential. MetaWealth is at the forefront of making blockchain technology accessible, aiming to reshape the fintech industry. Based in London, MetaWealth is strategically positioned at the heart of Europe’s tech scene, ready to lead the next wave of fintech innovation.

The future of real estate investment is intertwined with Web3, and MetaWealth is poised to be a key player in this transformation. As blockchain technology matures, MetaWealth’s model demonstrates the potential for technological advancements to create a more inclusive financial landscape.

For more detailed insights, you can read the original article on Tech Funding News.

AI Revolutionizes Facility Management Amidst Labor and Efficiency Challenges

Cupertino, CA — Facility management is at a crossroads, facing unprecedented challenges that threaten its very foundation. Overwhelmed by labor shortages and operational inefficiencies, facility managers are turning to artificial intelligence (AI) as a lifeline. According to a recent survey by QByte AI, AI-powered tools are rapidly reshaping the landscape of facility management in 2025.

The survey highlights that a staggering 80% of managers spend excessive hours hunting for information, while 60% are burdened by unsustainable workloads. This has led to a troubling trend, with 40% contemplating leaving their roles. These statistics underscore a crisis in the industry, demanding immediate attention.

AI is emerging as a beacon of hope. By providing instant access to maintenance data through simple prompts, enabling proactive monitoring, and improving real-time collaboration, AI is poised to alleviate the pressures faced by maintenance teams. As noted by industry expert Sam Manjunath, cofounder of QByte AI, “With facility teams stretched thin, the industry is rapidly embracing AI to remove manual workload burdens.”

The integration of AI-driven Computerized Maintenance Management Systems (CMMS) is transforming how facility managers operate. Companies are investing in AI to streamline operations, reduce unplanned downtime, and enhance overall efficiency. The adoption of AI technologies, such as those developed by QByte AI, is not just a trend but a necessary evolution.

Kim Anderson, a seasoned facility manager, experienced firsthand the transformative power of AI during a critical HVAC system failure. With maintenance records scattered across multiple systems, AI-enabled tools provided the timely insights needed to address the crisis efficiently. “Having AI-powered tools right at my fingertips felt like a breath of fresh air—suddenly, I could focus on what truly mattered instead of drowning in endless details,” Anderson remarked.

This shift towards AI is not only a response to labor shortages but also a strategic move to enhance operational efficiency. As companies navigate the complexities of modern facility management, AI offers a sustainable solution to the challenges of today and tomorrow.

For further insights into how AI is reshaping facility management, the original article can be accessed here.

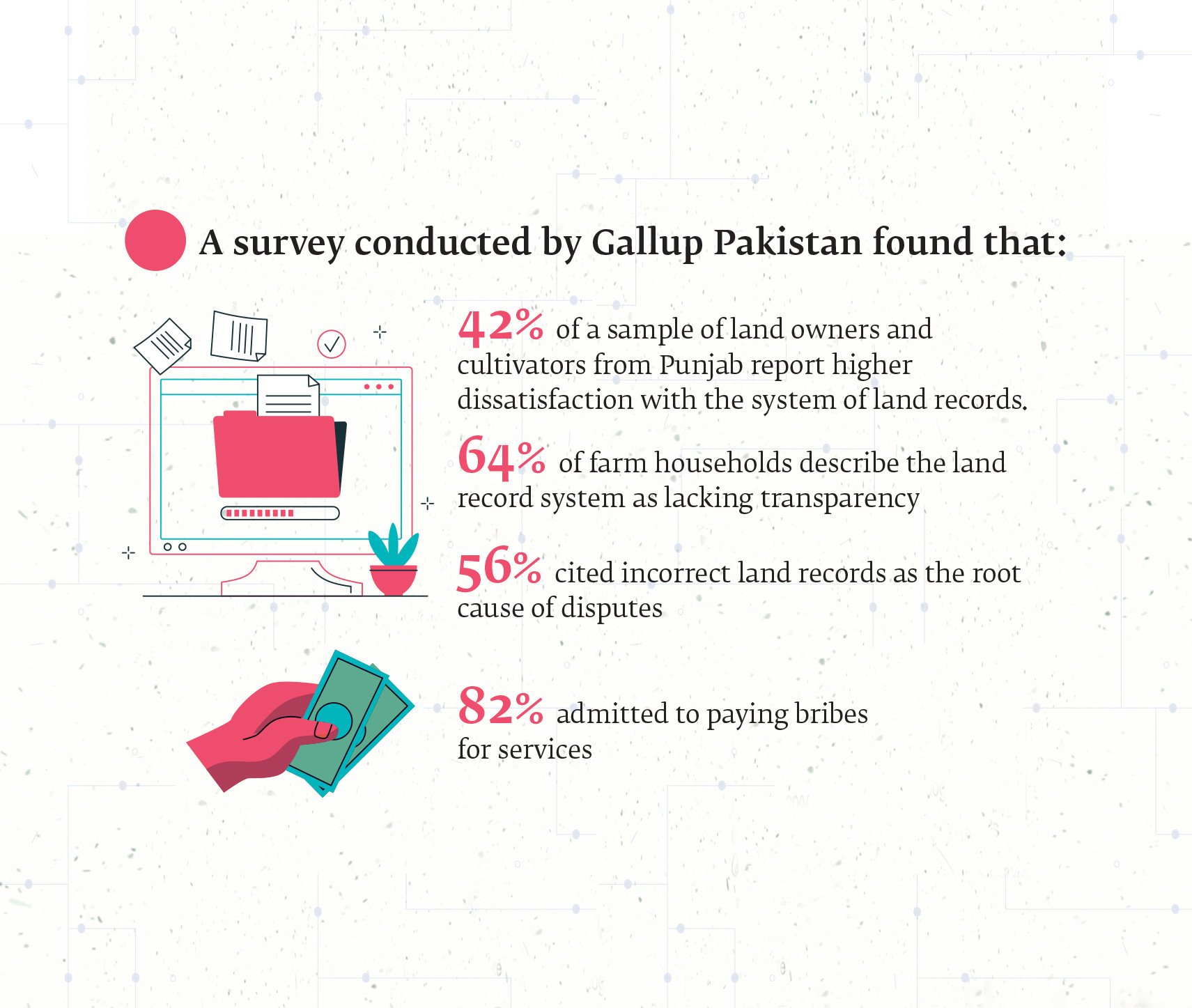

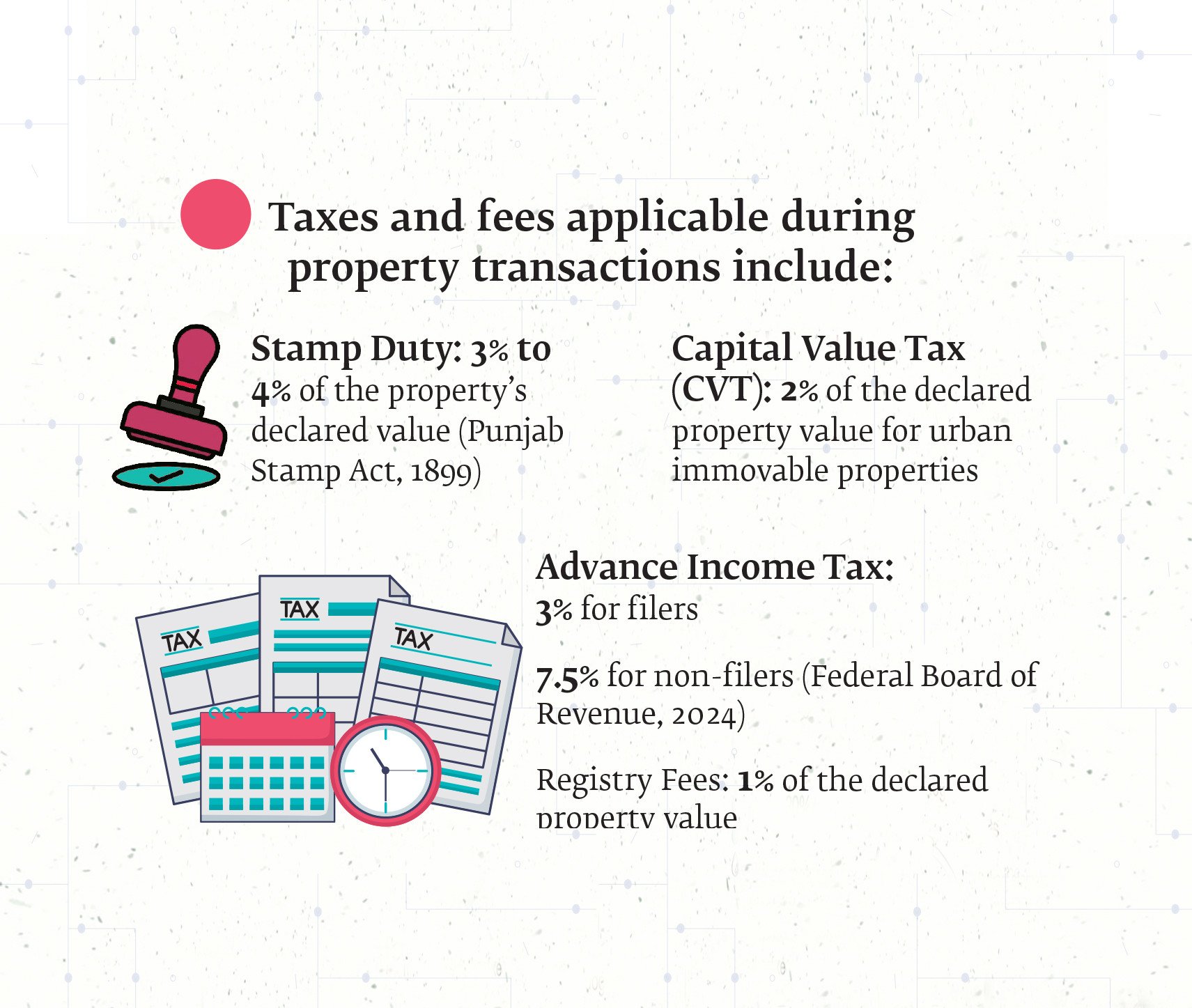

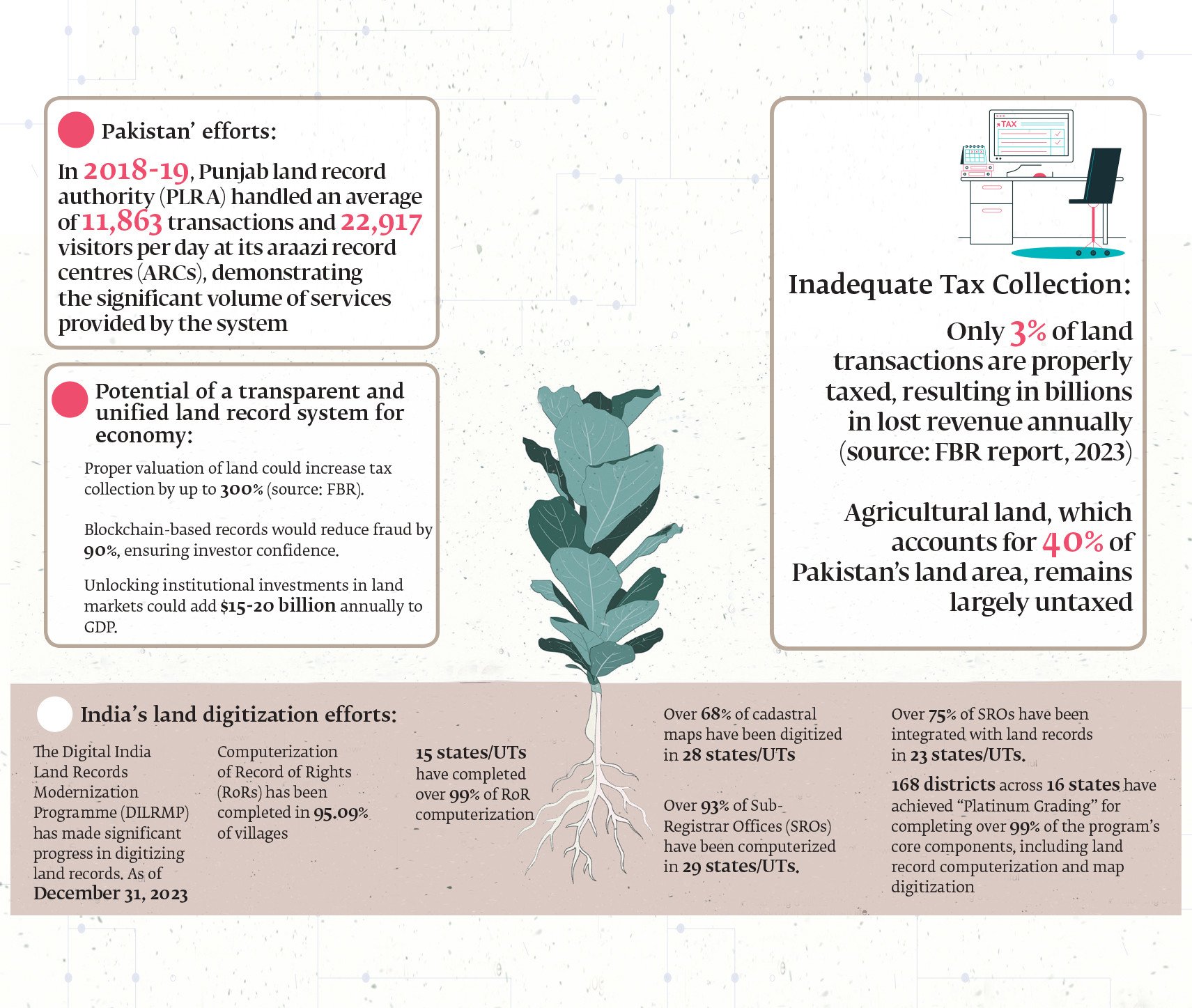

Blockchain to Revolutionize Pakistan’s Land Management

In the heart of Pakistan’s bustling cities and sprawling rural landscapes lies a persistent challenge that has long stifled economic growth and social stability: land management. For decades, the system has been mired in inefficiencies, corruption, and outdated colonial-era practices, leaving millions frustrated and the nation’s economic potential untapped.

Efforts to modernize this convoluted system have been made, notably through initiatives like Punjab’s Land Record Management Information System (LRMIS). However, these efforts remain fragmented and limited in scope. The slow pace of digitization and the continued reliance on manual records mean that the full benefits of these initiatives are yet to be realized. According to a recent article in The Express Tribune, the ongoing challenges in land ownership and management are a significant hurdle to Pakistan’s progress.

Blockchain: A Beacon of Hope

Amidst these challenges, blockchain technology emerges as a promising solution. Known for its secure and transparent nature, blockchain could revolutionize land management in Pakistan by ensuring clear and immutable land titles. This technology has the potential to reduce corruption and fraudulent transactions, offering a path towards a more efficient and equitable system.

International examples provide a blueprint for success. Georgia’s blockchain land registry, for instance, has been hailed as a triumph in transparency and fraud prevention. By storing land records on an immutable digital ledger, Georgia has effectively eliminated disputes arising from document manipulation. This model offers valuable lessons for Pakistan, highlighting the transformative potential of blockchain in governance.
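The source does not describe Georgia's system in technical detail, but the core idea behind tamper-evident records can be sketched briefly. The example below assumes a simple hash-chained list in which each land record stores the SHA-256 hash of the previous entry, so editing an old title invalidates the chain. The record fields, parcel IDs, and function names are hypothetical, and a real registry would add distributed consensus and digital signatures on top of this.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record: dict, prev_hash: str) -> str:
    """Hash a land record together with the previous entry's hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(ledger: list, record: dict) -> None:
    """Append a record, chaining it to the hash of the last entry."""
    prev = ledger[-1]["hash"] if ledger else GENESIS
    ledger.append({"record": record, "prev": prev, "hash": record_hash(record, prev)})


def verify(ledger: list) -> bool:
    """Recompute every hash; any edited record breaks the chain."""
    prev = GENESIS
    for entry in ledger:
        if entry["prev"] != prev or entry["hash"] != record_hash(entry["record"], prev):
            return False
        prev = entry["hash"]
    return True


ledger = []
append(ledger, {"parcel": "P-001", "owner": "A. Khan"})    # original title
append(ledger, {"parcel": "P-001", "owner": "B. Ahmed"})   # later transfer
print(verify(ledger))                    # True

ledger[0]["record"]["owner"] = "Forger"  # manipulate an old record
print(verify(ledger))                    # False
```

The hash chain only makes tampering detectable. Preventing a single official from rewriting the whole chain is the job of the distributed ledger that a blockchain provides.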

Challenges and the Road Ahead

Despite its promise, implementing blockchain in Pakistan is not without challenges. Political resistance, bureaucratic inertia, and a lack of technical expertise pose significant hurdles. Moreover, vested interests benefiting from the current system’s opacity are likely to oppose such reforms. Overcoming these obstacles will require strong political will and collaboration across federal and provincial levels.

To pave the way for blockchain adoption, Pakistan must first consolidate existing digitization efforts, integrating provincial databases into a unified national platform. Legislation should standardize land valuation methods, eliminating disparities and closing loopholes that allow tax evasion. Public awareness campaigns will also be crucial in ensuring widespread adoption and trust in the new system.

Conclusion: A Call for Urgent Reform

The inefficiencies in Pakistan’s land management system have persisted for too long, hindering economic growth and legal transparency. However, with the right reforms and the adoption of cutting-edge technology, Pakistan has the potential to revolutionize its land administration. By learning from global examples and embracing blockchain’s capabilities, the country can unlock billions in economic value, boost investor confidence, and strengthen tax revenues.

The need for reform is urgent — Pakistan cannot afford to let its land management crisis continue unchecked. As highlighted in The Express Tribune, the time for action is now.

Ocean City Council Enacts New Short-Term Rental Restrictions

Ocean City, Md. – In a decisive move, the Ocean City Council has approved new restrictions on short-term rentals, despite opposition from over 200 residents. The council’s decision came after a comprehensive review of community concerns and potential impacts on local neighborhoods.

Occupancy Limits Enforced

The newly approved occupancy proposal limits rental units to two guests per bedroom plus two additional occupants; children under 10 are not counted toward this total. The ordinance also prohibits converting attics, garages, and other non-bedroom spaces into bedrooms unless they meet town permitting requirements. The measure aims to preserve the character of residential areas, and it adjusts the overnight accommodation period to midnight through 7 a.m. to align with the town's noise ordinance.
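The occupancy rule reduces to a simple formula. The sketch below assumes that "two people per bedroom, plus an additional two occupants" means 2 × bedrooms + 2, that only permitted bedrooms count, and that only guests aged 10 or older count toward the cap. The function names are illustrative and do not come from the ordinance text.

```python
def max_occupancy(bedrooms: int) -> int:
    """Cap on counted guests: two per permitted bedroom, plus two more."""
    if bedrooms < 0:
        raise ValueError("bedrooms must be non-negative")
    return 2 * bedrooms + 2


def within_limit(bedrooms: int, guest_ages: list[int]) -> bool:
    """Check a booking; children under 10 are excluded from the count."""
    counted = sum(1 for age in guest_ages if age >= 10)
    return counted <= max_occupancy(bedrooms)


# A three-bedroom unit allows up to 8 counted guests.
print(max_occupancy(3))  # 8
# Eight guests aged 10+ plus two young children still complies.
print(within_limit(3, [40, 38, 16, 14, 12, 70, 68, 35, 6, 3]))  # True
```

Under this reading, an unpermitted attic conversion would not add to `bedrooms`, so it would not raise the cap.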

Minimum Stay Requirement

A second proposal, which establishes a five-night minimum stay for rentals in the R-1 and MH zoning districts, passed its first reading on a 5-2 vote and now requires a second reading for final approval. Realtor Terry Miller, who led the opposition and gathered approximately 200 signatures, argued that the policy could sharply reduce rental income during the summer months, since the national average stay is just 3.41 days. Mayor Rick Meehan defended the measure, emphasizing the need to preserve the character and tranquility of residential neighborhoods.

Moratorium on New Licenses

Adding to the regulatory changes, the council has enacted an 11-month moratorium on new short-term rental licenses in the R-1 and MH districts. This moratorium is effective immediately but does not affect applications submitted before January 28, 2025. Property owners with existing rental licenses can apply for renewal and supplementary short-term rental licenses for the 2025 license year.

The original article detailing these developments can be accessed here. This decision by the Ocean City Council marks a significant shift in local policy, aimed at balancing rental activity with community interests. The minimum stay proposal awaits further deliberation in the upcoming council meeting.

Redefining D.C.: A City of Dual Perceptions and Meaningful Work

Washington, D.C., a city often labeled as a “swamp,” is simultaneously a beacon for those driven by a sense of mission and purpose. While the term “swamp” has been used pejoratively to describe the political landscape of the capital, many who reside and work there see it as a place where significant contributions to society are made. This dual perception is explored in an insightful NPR article by Brian Naylor.

From the early days of its establishment, Washington has been criticized. Timothy Noah, a writer and journalist, notes that even before the city was built, it was described as a “political hive” by Thomas Treadwell, an Anti-Federalist senator. Yet, this view overlooks the “other” Washington, where residents and workers strive to make a positive impact.

In this “other” Washington, the National Institutes of Health in Bethesda, Maryland, played a crucial role in developing the blueprint for the COVID-19 vaccine. According to Noah, this breakthrough was not the result of private companies but rather the work of dedicated government researchers.

Federal agencies are involved in a wide array of functions, from managing the National Parks to processing tax returns and launching telescopes into deep space. Eric Choy, a leader in Customs and Border Protection, is committed to stopping the import of goods produced by forced labor, driven by a sense of duty to uphold national values.

Choy, who was honored with the Service to America Medal, emphasizes the importance of service to something greater than oneself. His perspective is shared by others like Christy Delafield of FHI 360, who moved to D.C. to contribute to global health and humanitarian aid systems.

Ryan O’Toole, a congressional staffer, reflects on his experiences in Washington, including being in the House chamber during the January 6th Capitol riot. He witnessed Senate staffers safeguarding the electoral college votes, actions he describes as heroic.

Despite its reputation, Washington is a vibrant and diverse community. While some may come seeking fame or fortune, many more are motivated by the desire to do good, as highlighted in Naylor’s article. This narrative challenges the notion of D.C. as merely a swamp, portraying it instead as a place where meaningful work is pursued.

For more insights on the history and dynamics of Washington, D.C., explore this National Mall history.

Addressing America’s Housing Crisis: Efforts and Challenges under the Biden Administration

The American housing crisis is not merely a statistic; it is a pressing reality affecting millions of families nationwide. Under the Biden administration, rent prices have soared to unprecedented levels, and homeownership has become an elusive dream for many. Over the past four years, the administration has taken several steps to address this entrenched issue. Let us delve into these measures and assess their effectiveness while casting light on the current state of housing in the United States.

Federal Investment and Housing Initiatives

The Biden administration has made significant federal investments in affordable housing, allocating billions of dollars in grants and funding with the goals of building 2 million new homes, reducing rental costs, and offering tax credits for homebuyers.

The administration also expanded the Low-Income Housing Tax Credit (LIHTC) and proposed a Neighborhood Homes Tax Credit. Despite these efforts, the U.S. still faces a shortage of 4.5 million homes, underscoring the severity of the crisis.

Homelessness and Federal Response

While funding for homelessness prevention increased, the nation witnessed an 18% rise in homelessness in 2024. The lack of affordable units has significantly contributed to this surge. The National Alliance to End Homelessness reports that approximately 700,000 individuals are experiencing homelessness in the U.S.

Challenges in Addressing Housing Issues

Efforts to tackle the housing crisis have encountered substantial obstacles. Bipartisan cooperation is essential for sustainable solutions, yet political division remains a significant barrier. Additionally, high mortgage rates and supply shortages continue to impede progress.

Protecting Renters and Curbing Corporate Practices

The administration has taken measures to protect renters from unfair practices. It cracked down on corporate landlords exploiting algorithms to inflate rents and proposed capping rent increases at 5% for properties built with federal tax credits. The introduction of a Renters Bill of Rights further outlines principles for fair rental markets and prohibits hidden fees in rental agreements.

Looking Ahead

Although the Biden administration has laid the groundwork for addressing the housing crisis, much work remains. Future policies must focus on increasing supply, reducing costs, and protecting vulnerable populations. Only then can we hope to see real progress in making housing affordable for all.

The original article from Norada Real Estate Investments provides an in-depth analysis of these initiatives and the ongoing challenges in the housing sector.

The Future of Real Estate: Technology’s Transformative Impact by 2025

In a rapidly evolving world, technology is set to redefine the real estate landscape by 2025. As reported by AZ Big Media, several cutting-edge technologies are poised to revolutionize how properties are bought, sold, rented, and managed. From artificial intelligence (AI) and blockchain to virtual reality (VR) and data analytics, these innovations are reshaping the expectations of consumers and intensifying competition among real estate businesses.

AI-Driven Property Recommendations

AI is set to become the cornerstone of property searches, offering personalized recommendations based on user preferences such as budget, location, and lifestyle. John Beebe, CEO and Founder of Classic Car Deals, highlights that AI algorithms will employ predictive analytics to identify valuable assets and forecast market conditions, streamlining the property search process for buyers and renters.

Blockchain for Transparent Transactions

The integration of blockchain technology promises enhanced security and transparency in real estate transactions. Dr. Nick Oberheiden, Founder of Oberheiden P.C., notes that smart contracts will automate agreements, eliminating intermediaries and reducing transaction costs. Blockchain will also facilitate fractional ownership, opening new investment opportunities.

Virtual Reality for Property Tours

Virtual reality is transforming property marketing by allowing potential buyers to tour homes remotely. Gerrid Smith, Founder & CEO of Fortress Growth, emphasizes that VR technology will offer hyper-realistic experiences, enabling international shoppers to explore properties without traveling.

Big Data for Market Insights

Big data platforms will provide valuable market insights, helping stakeholders make informed decisions. Sam Hodgson of ISA.co.uk explains that predictive analytics will highlight market trends and property appreciation rates, benefiting buyers and sellers alike.

IoT-Enabled Smart Homes

The Internet of Things (IoT) will integrate advanced solutions into homes, from energy-efficient systems to community-level innovations. Alex L. of StudyX anticipates that these developments will appeal to environmentally conscious consumers seeking sustainable living options.

Digital Twins for Property Development

Digital twins, or virtual replicas of physical structures, will become mainstream by 2025. Ivy Berezo of LUCAS PRODUCTS & SERVICES highlights that this technology will enhance accuracy and efficiency in property development, allowing real-time collaboration across geographical boundaries.

Enhanced Marketing with AR and AI

Augmented reality (AR) and AI will revolutionize property marketing by offering interactive experiences. Leonidas Sfyris of Need a Fixer notes that AR apps will allow buyers to visualize renovations, while AI chatbots will provide instant answers to inquiries.

Sustainable Real Estate Practices

Technology will drive sustainability in real estate, with AI and IoT enabling energy-efficient designs. Deborah Kelly of Brickhunter explains that integrated systems will optimize resource consumption, appealing to eco-conscious buyers.

Remote Work Influencing Location Choices

The rise of remote work will shift property preferences, increasing demand for homes with dedicated workspaces and reliable internet connectivity. Gemma Hughes of iGrafx suggests that developers should cater to these trends by incorporating flexible workspaces into residential complexes.

Frictionless Transactions Through Digital Platforms

Digital platforms will streamline real estate transactions, from virtual tours to e-signing documents. Dean Lee of Sealions predicts that blockchain and AI will enhance transaction efficiency, setting a new standard for smart real estate practices.

As we look to the future, these technological advancements will drive significant changes in the real estate market by 2025. Industry stakeholders must adapt to these innovations to remain competitive and meet the evolving demands of consumers.

India’s Real Estate Revolution: Growth, Opportunities, and Technological Transformations

India, poised to emerge as a global power, now regards its real estate industry as a crucial indicator of economic health and a vital part of the nation's development. The phrase “Roti, Kapda, Makaan” (food, clothing, shelter) captures the essential Indian dream, in which a ‘Makaan,’ or home, symbolizes security and prosperity. This aspiration is propelling the real estate market to unprecedented levels.

Chintan Vasani, a Founder Partner at Wisebiz Developers, underscores the importance of acquiring essential skills for success in this burgeoning sector.

Impact of COVID-19 on Housing Preferences

The COVID-19 pandemic has drastically shifted perspectives, prompting people to re-evaluate their housing needs. As homes became workspaces, learning centers, and sanctuaries, the preference for renting diminished. This shift, reinforced by historically low interest rates, significantly boosted homeownership, a trend expected to endure.

Recognizing the sector's potential, the Indian government has introduced policies to support and expand it. The Real Estate (Regulation and Development) Act (RERA) has brought critical transparency and accountability, empowering buyers and strengthening market confidence. Moreover, the government's focus on affordable housing and infrastructure development is opening fresh avenues for real estate stakeholders.

Technological Progressions

The advent of technology is also reshaping the real estate landscape. Proptech, a blend of property and technology, is transforming how the industry operates, and tools such as virtual tours, online listings, and digital payments are now commonplace.

Despite challenges, India's real estate future looks promising. A growing middle class, rapid urbanization, and increasing disposable incomes are key growth drivers. A career in this sector offers diverse and potentially rewarding opportunities, ranging from property brokerage and sales to project management and real estate law.

With the industry’s expansion, skilled professionals are in high demand. Those possessing a nuanced understanding of market dynamics alongside strong interpersonal skills are likely to excel.

In summary, the real estate sector is pivotal to India's development, and its ongoing growth aligns with the aspirations of millions of Indians. As the industry continues to evolve, buoyed by technological advances and government initiatives, it offers a compelling career proposition. Individuals equipped with passion, expertise, and strategic insight can achieve significant success in this dynamic field.

For more insights, you may refer to the original article on India Today.

Top Real Estate Investment Trends for 2025: Expert Insights

As the real estate landscape evolves, strategic investments can make all the difference for stakeholders aiming to maximize returns. Johan Hajji, Cofounder at UpperKey, shares his insights into pivotal trends expected to shape property investment in 2025. Passionate about property management, real estate investments, proptech, and business growth, Johan outlines the key areas that investors should focus on to stay ahead.

Property investors face a rapidly changing market environment, influenced by rising costs, technological advancements, and altered housing preferences. As we approach 2025, it’s crucial to track trends that are likely to impact the property sector significantly.

1. Booming Interest in Smaller Cities

Emerging as new investment hotspots, cities like Boise, Charlotte, and Tampa are experiencing rapid growth due to the increasing popularity of remote work. This trend allows more people to relocate from traditionally expensive cities like New York and San Francisco to places offering a lower cost of living. Investors might discover greater potential in these smaller markets, where property prices are competitive and growth prospects abound. For further insights, explore the discussion on city affordability.

2. Rising Demand for Green Buildings

Sustainability is at the forefront of real estate investment. Eco-friendly buildings that minimize energy use are gaining traction not only for their environmental benefits but also for their appeal to cost-conscious tenants. Governments are also incentivizing sustainable construction through tax breaks, creating a promising avenue for investors. Learn more about sustainable housing preferences here.

3. A Surge in Renting Patterns

With ongoing increases in home prices, renting has become a preferable option for many. This has catalyzed the rise of build-to-rent (BTR) communities, which provide long-term rental solutions for those not inclined to purchase property. These developments commonly feature shared amenities and appeal to renters seeking elevated living standards. The growth of BTR properties is examined here.

4. Transformation Through Property Technology

The integration of technology in property transactions and management is revolutionizing the sector. Tools like AI optimize tenant management and maintenance scheduling, while blockchain enhances transaction speed and security. For comprehensive insights, consider how technology impacts property markets.

5. Navigating Interest Rates and Inflation

Interest rate fluctuations and inflation remain critical influences on real estate valuations. High rates can dampen market activity by making borrowing more expensive, but real estate is often regarded as a strong hedge against inflation because rents tend to rise along with prices. For a deeper understanding, review how interest rates affect economic conditions.

6. The Growing Appeal of Affordable Housing

The demand for affordable housing is surging, prompting public-private partnerships to fulfill this critical need. This sector offers substantial returns alongside social benefits, complemented by attractive tax incentives for investors participating in affordable housing projects. Learn more about affordable housing initiatives.

As outlined by Johan, staying informed of these real estate trends and adapting strategies accordingly will be fundamental for investors aiming for success and maximizing returns in the competitive landscape of 2025. The full potential of these evolving trends promises not only personal gains but also broad societal benefits.

For additional opportunities and insights, visit the Forbes Business Council.

The Role of Zoning Regulations in the Housing Affordability Crisis

As the nation confronts the ongoing housing affordability crisis, a key focus has emerged on the role of zoning regulations in either hindering or promoting the construction of affordable housing. These regulations, which dictate land use and building specifics, have come under scrutiny for their potential to either restrict or facilitate housing production.

Zoning laws, historically rooted in early 20th-century urbanization efforts, have evolved significantly since New York City’s pioneering ordinance in 1916. This landmark regulation sought to manage urban density and building heights, setting a precedent for what would become known as Euclidean Zoning. This form of zoning, legitimized by the U.S. Supreme Court in the 1926 case Village of Euclid v. Ambler Realty Co., remains the most prevalent type in the United States.

However, the intricacies of zoning laws often create barriers to housing development. Common obstacles such as minimum lot sizes, height restrictions, and parking requirements can limit the supply of affordable units. Conversely, incentives like density bonuses and streamlined approval processes can encourage developers to build more accessible housing.

Inclusionary Zoning: A Double-Edged Sword

One strategy, Inclusionary Zoning (IZ), requires that a portion of the units in new developments be affordable. However, this approach can inadvertently raise costs for market-rate units and reduce overall housing production. A study of Los Angeles's Transit Oriented Communities program found that a 20% IZ requirement could cut new housing production by nearly half.

Similarly, a 2019 report from the Mercatus Center highlighted that IZ often fails to significantly boost real housing supply, with minimal impact on multifamily starts and a decrease in single-family starts.

Innovative Approaches to Overcome Zoning Barriers

Cities like Salt Lake City and Minneapolis are pioneering efforts to overcome zoning barriers. Salt Lake City, for instance, allows missing middle housing types in areas traditionally zoned for single-family homes, exempting them from certain lot requirements. Minneapolis has seen a 45% increase in permits for 2-4 unit buildings due to reduced parking mandates.

On a broader scale, states like California and New York are implementing policies to pre-empt local zoning laws that restrict housing supply. California’s SB 9 and SB 10 enable duplexes and small multifamily developments in single-family zones, while New York’s initiatives aim to increase density near transit hubs.

Looking Ahead

As policymakers strive to create a more equitable housing landscape, the challenge lies in crafting zoning laws that balance density with livability. Thoughtful zoning reforms, coupled with incentives for developers, can significantly enhance affordable housing efforts. By embracing innovative approaches and fostering public-private partnerships, we can work towards a future where housing is accessible for all.

For further insights, explore the original article from the National Association of Home Builders, which delves deeper into the complexities of zoning and housing affordability.

Real Estate Investment Outlook for 2024: Key Cities and Market Insights

Market Trends

The U.S. housing market remains robust, driven by strong buyer demand despite rising interest rates. A notable trend is the resilience of single-family rentals, particularly with the shift towards build-to-rent projects that address affordability constraints in homeownership.

Key Cities for Investment

- Boise, Idaho: Praised for its strong job market and affordable housing, Boise is a top contender for real estate investors.

- Houston, Texas: Known for its robust real estate market, driven by a thriving job sector.

- Dallas, Texas: Offers diverse real estate opportunities with a rapidly growing population and economy.

- Las Vegas, Nevada: Recognized for its high rental demand paired with affordable housing.

- Atlanta, Georgia: Noted for its low cost of living and strong real estate market fundamentals.

Emerging Markets and Strategies

Investors are advised to consider factors such as rental occupancy rates, local economic conditions, and real estate appreciation potential when choosing locations.

Conclusion and Advice

As the article from Norada Real Estate Investments emphasizes, due diligence and an understanding of local market dynamics are crucial for profitable real estate investments in 2024.

Foreign Investment Insights

Foreign buyers continue to play a significant role in the U.S. real estate market. The latest data from the National Association of Realtors highlights ongoing international investment trends, underscoring the global appeal of U.S. real estate.

For those considering real estate investments in 2024, this comprehensive guide serves as a critical resource for identifying potentially lucrative markets across the United States. To explore more about these prime locations, visit the Norada Real Estate Blog.

Key Themes in Commercial Real Estate for 2025: A Tentative Revival

As we step into 2025, the commercial real estate (CRE) sector is poised for a tentative revival, following a year of transition in 2024. According to a recent report by Oxford Economics, five key themes are expected to shape the industry’s outlook, offering both opportunities and challenges for investors and market participants.

Global Economic and Interest Rate Dynamics

The global economy is projected to experience moderate growth, coupled with a continuation of interest rate cuts. However, geopolitical uncertainties may add complexities to this landscape. The shift towards a more protectionist global economy is likely to redefine trade, price stability, and investment strategies, influencing the CRE sector’s trajectory.

Capital Value Growth Prospects

Despite ongoing policy rate cuts, long-term bond yields are expected to remain above pre-pandemic levels, limiting the potential for real estate yield compression. As a result, capital value growth may be tempered, requiring investors to take a cautious yet strategic approach to maximizing returns.
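The link between yields and capital values can be made concrete with the standard direct-capitalization identity. This identity is used here as an illustrative assumption, not as Oxford Economics' own model. A property's value $V$ equals its net operating income $N$ divided by its yield (cap rate) $y$, so the change in value between two periods decomposes into income growth and yield movement:

```latex
V = \frac{N}{y}
\quad\Longrightarrow\quad
\frac{V_1}{V_0} = \frac{N_1}{N_0} \cdot \frac{y_0}{y_1}
```

Yield compression means $y_1 < y_0$, which lifts values faster than income. If yields cannot fall much from current levels, $y_0 / y_1 \approx 1$ and capital growth is limited to roughly the growth in income. For example, 3% income growth with unchanged yields raises values by about 3%. The same income growth with yields falling from 5.0% to 4.5% would give $1.03 \times 5.0 / 4.5 \approx 1.144$, a gain of about 14%.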

Regional and Sector-Specific Investment Opportunities

Oxford Economics highlights that the next 12 to 18 months present a favorable window for direct real estate investments in specific regions and sectors. This period is anticipated to offer the most advantageous entry point in the current cycle, encouraging investors to explore diverse opportunities across the global market.

Rebound in CRE Transaction Volumes

Global CRE transaction volumes have reached near-decade lows, but emerging trends suggest a potential resurgence. As trust in the market begins to rebuild, a convergence of powerful trends is expected to ignite a strong rebound in transaction volumes, providing a renewed sense of optimism for the industry.

Interest in Alternative and Niche Sectors

Alternative and niche sectors such as student housing, seniors housing, healthcare, and data centers continue to attract global investor interest. While these sectors offer promising opportunities, balancing strategies against inherent risks and countervailing structural forces will be critical for optimizing returns in 2025 and beyond.

For more detailed insights and forecasts, download the full report from Oxford Economics or register for the upcoming webinar to gain a deeper understanding of the factors driving this tentative rebound in CRE values.

Stay informed and prepared as the CRE sector navigates this pivotal year, leveraging the insights and analyses provided by industry experts to make informed investment decisions.

Real Estate Crowdfunding: A New Frontier for Investors

The landscape of real estate investing is undergoing a remarkable transformation, thanks to the rise of real estate crowdfunding platforms. As highlighted in a recent NerdWallet article, these platforms are democratizing access to real estate investments, once the exclusive domain of affluent investors.

Lower Barriers, Higher Potential Returns

One of the most compelling aspects of these platforms is that they lower the barriers to entry. With low account minimums and reasonable fee structures, they give average investors an opportunity to diversify their portfolios with real estate. Unlike publicly traded REITs, which are bought through brokerages, real estate crowdfunding allows participation in private REITs and individual property investments. These can offer higher returns, albeit with greater risk.

Diverse Investment Opportunities

These platforms don’t stop at real estate. As the article notes, some also offer investments in venture capital, private equity, and even collectibles like art. This diversification appeals to investors looking to broaden their investment horizons.

The Best Platforms of February 2025

NerdWallet’s article provides a detailed evaluation of the top real estate crowdfunding platforms available in February 2025. The criteria for selection include account minimums, customer support, redemption options, and fees. This comprehensive analysis helps investors make informed decisions about where to allocate their funds.

For those interested in exploring the full range of options, the original article offers an in-depth look at this growing trend. Real estate crowdfunding is poised to become a popular investment vehicle, offering a new way for everyday investors to engage with the real estate market.

A 22-Year-Old’s Journey to $103K in Real Estate

Okay, let’s be real: who wouldn’t want to make six figures in their first year on the job? That’s the kind of success story that makes you sit up and go, “Wait, what? How?!”

So, meet Anna, a now 26-year-old real estate agent and mortgage loan originator who’s sharing the ups, downs, and bank-account-changing experiences of her first year in the real estate world. And let me tell you, it’s not as simple as slapping a For Sale sign on a mansion and collecting a bag of cash.

The Journey from College Student to Six-Figure Realtor

Anna got her real estate license at the end of 2017 and officially entered the big leagues in 2018. But instead of starting from scratch, she smartly positioned herself with a top-producing luxury real estate team in Orange County. That move alone gave her early exposure, experience, and—most importantly—leads.

Like many fresh-faced agents, she moved from a paid internship into a commission-based role, where her first major sale, an $800,000 condo, landed her a check for $10,120. Not bad for a first deal, right? But before you start writing your resignation letter to become a real estate mogul overnight, let’s break down where the rest of that commission went.

See, in real estate, you’re not the only one cashing in on that big payday. Brokerages and teams take their cuts through commission splits, so Anna pocketed only half of the total commission on that deal. And that’s just a small taste of Reality Check #1 in this profession: You don’t keep everything you earn.
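The story gives just enough numbers for a back-of-the-envelope check: an $800,000 sale, a $10,120 check, and half of the total commission kept. The Python sketch below works backward from those figures. It assumes a single flat split (the story doesn’t say how the cut was divided between the brokerage and the team), so treat the results as rough estimates rather than the actual terms of Anna’s deal.

```python
def implied_total_commission(take_home: float, agent_share: float) -> float:
    """Work backward from the agent's check to the total commission before splits."""
    if not 0 < agent_share <= 1:
        raise ValueError("agent_share must be in (0, 1]")
    return take_home / agent_share


sale_price = 800_000   # condo price from the story
take_home = 10_120     # Anna's check from the story
agent_share = 0.5      # "half", modeled as one flat split (an assumption)

total = implied_total_commission(take_home, agent_share)
rate = total / sale_price
print(f"Implied total commission: ${total:,.0f}")  # $20,240
print(f"Implied commission rate: {rate:.2%}")       # 2.53%
```

Changing `agent_share` shows how sensitive the estimate is: a larger share for the agent implies a smaller total commission behind the same check.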

The Harsh Lessons of Being a Real Estate Newbie

After the high of that first deal, Anna hit a dry spell—a struggle that many first-year agents face. Finding clients was rough, and she even had a $1.9 million sale completely vanish when the buyer went behind her back and worked directly with the listing agent. Talk about betrayal! Lesson learned: If you don’t lock in a client agreement, you’re leaving a lot up to chance.

At this point, her one big check from earlier wasn’t going to pay the bills indefinitely (even though, props to her, she stretched that $10K like a budgeting queen). That’s when she decided to pivot.

Switching Gears: Salary + Commission = Stability

Realizing that feast-or-famine income wasn’t for her, Anna discovered a real estate startup that offered a $5,000 monthly salary—yes, steady money—plus commissions on any closings she landed. This gave her the best of both worlds: guaranteed money hitting the bank account each month while still racking up real estate deals.

By structuring her income this way, Anna closed four to five homes a month for the remainder of 2018. While her commissions weren’t as high as they would have been in a traditional real estate role, the new model brought consistent income without the stress of deal-to-deal survival. By the end of the year, she had pulled in a grand total of $103,000.

Not bad for year one!

What We Can Learn from Anna’s Real Estate Grind

Anna’s story is not just about making a lot of money—it’s about how she made it. And more importantly, what lessons aspiring realtors (or anyone, really) can take from her journey:

- Don’t rely on just one client. That $1.9M sale that went poof taught Anna a valuable lesson: Diversify your leads and always have multiple deals in motion.

- Look for alternative ways to earn money in real estate. It’s not just about million-dollar home sales—rental deals, team splits, and different payment models like salary-based real estate roles can all stack up to a serious paycheck.

- Your first paycheck might be big, but it won’t last forever. Anna stretched her first $10K commission like a pro, but she quickly realized that consistent income beats sporadic windfall commissions.

- Getting burned is part of the industry. Losing deals, backstabbing clients, and navigating brokerage splits are all part of the game—what matters is how you adapt.

Is Real Estate Worth It?

Honestly, Anna’s first-year earnings are way above the norm for new real estate agents. Most struggle to hit just half of what she made. A ton of realtors don’t even close a deal in their first year. But Anna put herself in the right position—starting under an experienced team, finding alternate income sources, and recognizing when a steady paycheck was the smarter move.

So, is jumping into real estate a guaranteed golden ticket? Nope. But with the right strategy and relentless drive (seriously, this girl hustled), you can make it work.

What do you think—would you take the risk to chase commissions, or do you prefer the stability of a monthly paycheck? Let’s talk about it. Drop your thoughts in the comments! 🚀

The Ultimate Real Estate Exam Cheat Sheet: My Reaction to Maggie’s Top 20 Terms

Alright, future real estate moguls, you’re in for a ride because I just watched Maggie break down the top 20 must-know terms for the real estate exam, and let me tell you—it was like getting hit with a knowledge bomb (in the best way possible). Let’s dive in!

First Impression: So Many Terms, So Little Brain Space

So, Maggie jumps right in, no fluff, no small talk—just straight-up essential real estate knowledge. If you’re about to take your real estate exam and you don’t know about “deed restrictions” or the “Maria Test,” well, buckle up, because this video is basically your survival guide.

I’ve always thought of real estate as glamorous—you know, selling million-dollar homes, negotiating like a shark, closing deals over coffee. But turns out, there are a ton of rules, legalities, and downright scary terms that you have to understand before you even get close to your first transaction.

Let’s Talk About A Few Terms That Blew My Mind

1. Deed Restrictions – So You Can’t Just Go Wild with a House?!

So, apparently, if you buy a home with deed restrictions, you can’t just do whatever you want with it. Want to paint it bright neon green? Nope. Thinking of building a pirate ship in your front yard? Again, no. These restrictions are put in place by developers or homeowners associations (HOAs) to keep the neighborhood looking a certain way. Honestly, I could see myself forgetting about this and buying a home, only to find out I can’t add a massive slide from the roof to the backyard. Tragic.

2. The Maria Test – Not a Person, But Definitely Important

No, “Maria” isn’t Maggie’s bestie giving us exam tips—it’s actually an acronym (Method, Adaptability, Relationship, Intention, Agreement) to determine if something in a house is a fixture or personal property. Basically, if it’s bolted, screwed in, or clearly meant to stay, it’s part of the sale. If not, it’s up in the air. Imagine buying a house thinking you’re getting a fancy chandelier, only for the sellers to take it when they leave. The Maria Test will save you from heartbreak!

3. Non-Conforming Use – AKA, the Rebellious Buildings

If a small grocery store sits in a residential neighborhood just chillin’ between houses, that’s thanks to non-conforming use: basically, it’s “grandfathered in” because it was there before the zoning laws changed. But once it shuts down for good, whatever replaces it has to conform to the new rules. It’s like your friend who was the only one allowed to wear sneakers in gym class because they had a doctor’s note.

4. Antitrust Regulations – No Price-Fixing Allowed, Folks!

Did you know it’s illegal for real estate brokers to agree on commission rates together? Yeah, it’s called price-fixing, and apparently, that’s a huge no-no (thanks, Sherman Act!). I love that Maggie emphasized this because, let’s be real, a lot of people probably assume all agents secretly agree to charge the same rates. Not today, capitalism says no.

Bigger Takeaways – This Stuff Really Matters

What this video made super clear is that real estate isn’t just about selling houses—it’s about laws, ethics, and avoiding lawsuits. Every term Maggie covered plays a huge role in how agents conduct business, protect homebuyers, and—most importantly—stay out of trouble.

For example, lead-based paint disclosure? That’s not just a nice suggestion; it’s a federal requirement for homes built before 1978. If you don’t tell a buyer that a pre-1978 house might contain lead paint, you could be facing some serious legal drama. The same goes for blockbusting and steering, practices that have a dark history in real estate and are now illegal under the Fair Housing Act. It’s wild to think that unethical real estate tactics once shaped entire neighborhoods!

How This Relates to the Bigger Picture

Maggie’s breakdown reminded me of how understanding laws in any industry is critical, whether it’s real estate, finance, or even tech. This actually made me think of how big companies get fined millions of dollars for violating regulations they should’ve understood (looking at you, giant corporations). It’s the same for new real estate agents—know the rules, or you could lose your license before you even get started.

And let’s not forget the economic and functional obsolescence part—this section personally hurt my soul. Turns out, sometimes your home’s value drops for reasons completely out of your control, like a noisy freeway getting built next door (economic obsolescence), or because your house is stuck in the past, like having only one bathroom when modern homes have three (functional obsolescence). It’s like investing in Myspace stock—bad call.

Final Thoughts – This is the Cheat Code for Passing Your Exam

So if you’re preparing for your real estate exam, watch this video ASAP. Maggie somehow manages to make a firehose of information feel digestible, and you can tell she really knows her stuff. Plus, her breakdowns make it easier to remember these terms when crunch time hits and you’re staring at a multiple-choice question, sweating bullets.

And by the way, if you’re anything like me and need an extra push to absorb all of this, Maggie’s ebook “The Educated Agent” sounds like a solid investment. (Because let’s be real—we’ve all spent money on things way less useful, like that gym membership we swore we’d use.)

What do you think—are you feeling prepared for your real estate exam? Or did one of these terms totally throw you off? Let’s chat in the comments! 🚀