Humanity at the Crossroads: Ethical Implications of AI in Medicine

The integration of artificial intelligence (AI) in healthcare has ushered in a new era of medical advancements, but not without raising significant ethical concerns. As AI systems become more prevalent in fields like radiology, emergency medicine, and telehealth, the challenge lies in addressing fundamental issues such as patient consent, data privacy, and implicit biases that these technologies often overlook.

Unraveling the Bias in Algorithms

AI’s promise in healthcare is undeniable, given its ability to uncover hidden disease patterns and predict illnesses. However, reliance on historical data can exacerbate existing biases, particularly those affecting marginalized communities such as LGBTQIA+ people and certain ethnic and racial groups. As the source article highlights, addressing these biases during the initial implementation phase is crucial if AI is to improve outcomes more effectively than traditional methods.

Despite the importance of this issue, a study by du Toit et al. found that many peer-reviewed articles fail to address algorithmic bias adequately. Of 63 articles on hypertension, none tackled the issue, underscoring the need for healthcare and AI professionals to develop stringent measures to identify and correct such biases.

Empowering Patients Through Informed Consent

Patient autonomy remains a cornerstone of medical ethics, especially in the AI era. Older patients with multiple chronic conditions often express skepticism toward AI-based modalities. An ethical AI system must ensure that these patients are informed about the benefits and have the option to opt out. Transparency in this process fosters trust and addresses concerns about data security and privacy.

Responsible Deployment of AI Technologies

Regulatory bodies like the U.S. Food and Drug Administration (FDA) play a pivotal role in the ethical deployment of AI in healthcare. Establishing comprehensive guidelines and conducting frequent assessments are essential to maintaining transparency and accountability. The article emphasizes the need for a dynamic regulatory framework to prevent AI misuse and ensure patient welfare.

Liability Concerns and AI Hallucinations

Liability concerns present a complex challenge in the event of adverse outcomes. While physicians hold primary responsibility for AI technology selection, manufacturers must also ensure product safety and efficacy. The emergence of AI-specific liability insurance offers a novel solution to managing malpractice claims.

Moreover, healthcare providers must address AI hallucinations—AI-generated misinformation not based on real data. Recognizing the limitations of AI and its inability to replace personalized care is crucial.

Emotions and the Ethical Use of AI in Healthcare

AI’s impact on patient emotions is significant. The introduction of AI-powered diagnostic tools can lead to increased patient distress if not communicated empathetically. Ensuring equity and fairness in AI algorithms is imperative to prevent biases that affect emotional well-being.

Preserving the empathetic human connection in an AI-driven healthcare landscape is paramount. While AI can streamline tasks, it cannot replace human healthcare practitioners in addressing patients’ emotional needs.

As AI continues to transform healthcare, it is vital to prioritize ethical considerations. By actively engaging in ethical discussions, the healthcare industry can harness AI’s full potential to improve patient outcomes while upholding the values of medicine.

Gulf of America: Politics in Geography

Gulf of America? Google Maps Just Got Political, and We’re Still Processing

You ever wake up, check the news, and immediately question if you’re still dreaming? That was me this morning when I saw this headline: The Gulf of Mexico has been renamed the Gulf of America. Excuse me? Come again?

Wait, What Just Happened?

So, here’s the deal. President Trump, on his first day back in office, signed an executive order renaming the Gulf of Mexico to the Gulf of America. (Because apparently, top priority.) Google Maps, not one to shy away from a government-approved change, hopped right on board and updated the name on their platform. They even posted on X (formerly Twitter) that they have a “longstanding practice” of adjusting names according to official sources.

Translation: “Hey, don’t blame us. We’re just following the paperwork.”

Oh, and here’s the kicker—if you’re in Mexico, it’s still the Gulf of Mexico. So, depending on where you’re standing, that body of water has two different names. International waters just got a whole lot pettier.

My Immediate Reaction?

I have so many questions—mostly why? Who woke up and thought: You know what needs fixing? Not the economy, not infrastructure, but the name of that big ol’ body of water.

Also, imagine being a geography teacher right now. Yesterday, they were explaining the Gulf of Mexico. Today, they’re rewriting all their lesson plans.

And let’s not even start on travelers planning spring break trips. Google Maps is out here casually rewriting place names without warning. Imagine trying to meet your friends on the beach and texting, “Just follow the Gulf of Mexico signs. Wait, sorry, I mean America. I mean… I don’t even know anymore.”

Is This a Trend Now?

Honestly, this feels like a sequel to that time Google Maps showed Crimea differently depending on who was looking at the map. One glance from Russia? Part of Russia. A peek from Ukraine? Part of Ukraine.

Naming disputes aren’t new. Countries have been playing tug-of-war with names for centuries (Sea of Japan vs. East Sea, anyone?). But THIS? This is like renaming lasagna to ‘Freedom Pasta’ and expecting everyone to just roll with it.

What’s Next?

- Are we renaming the Atlantic Ocean to the Freedom Pond?

- Will the Grand Canyon become the Patriot’s Trench?

- How far does this go?

Honestly, I need to hear from you—because I can’t be the only one feeling like we’ve entered some alternate reality. Do you think this change actually matters? Are you calling it the Gulf of America now? Or will you be forever loyal to “Mexico” like an old-school map purist?

Drop your thoughts (or complaints) in the non-renamed comment section—because at this rate, even that might not be safe.

Commercial Real Estate Market: A $384.46 Billion Opportunity

The global commercial real estate market is on the brink of a substantial transformation. According to a recent report by Technavio, the market is projected to grow by USD 384.46 billion from 2024 to 2028, at a compound annual growth rate (CAGR) of 4.36%, driven by the expansion of the commercial sector worldwide. However, the shift toward remote work and the rise of e-commerce present significant challenges.
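CAGR is the constant yearly rate that links a starting value to an ending value, so a CAGR and an absolute dollar increase only make sense together once a base value is known. The summary above does not give the market’s base size, so the minimal sketch below uses a hypothetical round number (USD 1,000 billion) purely to show how the formula works. It also assumes four compounding years for 2024 to 2028; the report may count the period differently.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate that turns start into end."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: float) -> float:
    """Value reached by compounding `start` at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


# Hypothetical starting market size in USD billions; NOT a figure from the report.
base = 1000.0
years = 4  # assumed number of compounding years for 2024 to 2028
end = project(base, 0.0436, years)

print(f"Absolute growth: {end - base:.2f}")
print(f"Recovered CAGR: {cagr(base, end, years):.4f}")
```

The two functions are inverses of each other: `project` turns a rate into an ending value, and `cagr` recovers the rate from the two endpoints.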

Key Market Players

The market landscape is fragmented, with key players such as Atlas Technical Consultants LLC, Boston Commercial Properties Inc., and CBRE Group Inc. These companies are leveraging integrated marketing communication strategies, utilizing channels like newspapers, magazines, and social media to enhance customer engagement and drive sales.

Emerging Trends

Significant trends are reshaping the market. E-commerce is fueling demand for larger distribution centers and driving a surge in demand for logistics space, while reshoring in manufacturing is increasing the need for industrial spaces. The office sector is evolving with flexible work arrangements and a focus on health and safety.

Challenges Ahead

The commercial real estate market faces hurdles, particularly from the shift towards online shopping and remote work. Traditional retail spaces and office buildings are seeing decreased demand. Businesses are adapting by incorporating co-working spaces and flexible workspaces, challenging conventional real estate models.

Technological Impact

Technology plays a pivotal role in this transformation. Smart buildings, co-working spaces, and energy-efficient solutions are becoming increasingly important, and AI and IT solutions such as virtual property tours and online leasing platforms are changing how properties are marketed and managed.

Conclusion

The commercial real estate market is dynamic and complex, requiring businesses to adapt swiftly to align with evolving trends and technological advancements. As noted in the original article, the integration of AI and technology is crucial in navigating these changes and capitalizing on new opportunities.

Harnessing the Power of Marketing Certifications in 2025

In the ever-evolving landscape of 2025, marketing professionals are increasingly turning to certifications as a means to stay ahead of the curve and gain a competitive edge. With rapid advancements in technology and shifts in consumer behavior, these certifications have become a vital tool for marketers seeking to validate and expand their skill sets.

According to a recent article from TechTarget, certifications in areas such as AI, data analytics, and digital innovation are proving invaluable. These credentials not only differentiate professionals but also equip them with the tools needed to bring substantial value to both their teams and customers.

Why Marketing Certifications Matter

Marketing certifications serve as a testament to a professional’s expertise in specific areas such as social media marketing, SEO, and digital analytics. They provide a competitive edge in job applications and foster career growth by opening doors to new opportunities.

In 2025, a commitment to continuous education through certifications is crucial. It signals a willingness to invest time in professional development and to stay abreast of industry trends and best practices.

Free vs. Paid Marketing Certifications

When choosing between free and paid marketing certifications, professionals must consider their personal goals, time, and budget. Paid certifications generally offer more comprehensive education with structured learning materials and professional feedback. They often come from recognized institutions and may require tests or projects to demonstrate proficiency.

Conversely, free certifications, while lacking the depth of paid options, are more accessible. They provide a flexible, self-paced learning experience through online tutorials and resources. Both paths offer valuable opportunities to enhance marketing knowledge and career potential.

Top Certifications and Courses for 2025

- AI in Marketing:

  - AI for Business and Marketing – Offered by the Digital Marketing Institute, this course provides a comprehensive introduction to AI tools and strategies. Price: $535

  - AI for Marketing – A free course by HubSpot Academy covering AI integration in marketing efforts.

- Advanced SEO Courses:

  - Google SEO Fundamentals – Available via Coursera, this self-paced program covers essential SEO topics. Price: Requires Coursera subscription

  - Semrush Academy – Free SEO courses focusing on keyword research and digital advertising.

- Digital Marketing Innovations:

  - Meta Certifications – Validate expertise in platforms like Facebook and Instagram. Price: $99-$150 per exam. More info at Meta Blueprint.

  - Google Ads Search Certification – Free course on Skillshop focusing on search ad campaigns.

As we delve deeper into 2025, the importance of marketing certifications continues to grow. They not only enhance individual skill sets but also provide a strategic advantage in a competitive industry landscape. For more insights, visit the original article on TechTarget.

Seismic Shifts in Global Economy Amidst US Tariff Threats

The global economic landscape is undergoing a seismic shift as countries brace for potential US tariffs. Since the start of the first Trump Administration in 2017, the US share of global trade has declined, even as its share of global GDP has risen. This divergence reflects robust US economic growth, while soaring equity valuations point to investor confidence in American innovation.

However, the world is not standing still. Non-US trade is flourishing, with countries actively signing new trade agreements to reduce reliance on the US. The European Union, for instance, has recently finalized deals with South American nations and is in talks with Australia and Indonesia, as reported by the Financial Times. Meanwhile, China is pivoting towards Asia-Pacific partnerships and engaging with Latin American countries.

The US Economy Remains Strong

Despite these global shifts, the US economy continues to show resilience. According to recent data from the Bureau of Economic Analysis, the US GDP grew by 2.8% in 2024, driven by a surge in consumer spending, particularly on durable goods.

Yet, there are signs of caution. Business investment fell, and trade made a negative contribution to GDP growth in the fourth quarter. The looming threat of tariffs could further complicate matters, potentially leading to higher consumer prices and impacting export growth.

US Federal Reserve Keeps Policy Unchanged

The Federal Reserve, as expected, has kept interest rates steady. In a recent press conference, Fed Chair Powell highlighted that while the labor market has cooled, inflation remains “elevated.” This cautious stance led to a drop in equity prices and a rise in bond yields, reflecting investor sentiment.

Eurozone Economy Stagnates While ECB Cuts Rates

Across the Atlantic, the Eurozone economy is facing stagnation. Recent reports from Eurostat show no growth in the fourth quarter of 2024. The European Central Bank (ECB) responded by cutting interest rates, aiming to boost activity amid ongoing economic headwinds.

ECB President Lagarde noted that while manufacturing is weak, services remain strong. However, potential US tariffs pose a risk, potentially impacting the Eurozone’s growth trajectory.

As the global economic narrative unfolds, the world watches closely, anticipating the US’s next move on tariffs and its ripple effects on global trade and economic stability.

Urban Resurgence: The Return of Homebuyers to the City

In a striking reversal of pandemic-era trends, homebuyers are once again flocking back to urban centers after a brief suburban exodus. This shift is detailed in a recent report by the National Association of Realtors (NAR), which highlights emerging patterns in the housing market.

During the height of the COVID-19 pandemic, wealthy urbanites from cities like New York City and San Francisco sought refuge in rural areas and small towns, leading many to speculate about the demise of urban living. However, data from NAR reveals a different narrative.

Between 2017 and 2021, the share of home purchases in rural areas and small towns remained stable. Yet 2022 saw a notable increase, with rural areas capturing 19% and small towns 29% of the market. This surge came at the expense of suburban areas, which saw their share drop from a steady 50% to 39%.

Fast forward to 2024, and the tide has turned once more. Suburban home purchases have rebounded to 45%, while urban centers have surged to 16%, surpassing pre-pandemic levels. Meanwhile, rural and small-town purchases have returned to their typical shares of 14% and 23%, respectively.

“When bad things happen in New York, pundits often say it’s the end of the city,” notes the article from HousingWire. Yet, many New Yorkers simply relocated within the city, moving from Manhattan to Brooklyn, rather than leaving entirely.

San Francisco, with its tech-heavy population, has been slower to recover due to the prevalence of remote work. However, as major tech firms call employees back to the office, the urban housing market shows signs of revival.

Additional insights from the NAR report indicate a return to shorter migration distances, with the median dropping from 50 miles in 2022 back to 20 miles. The age of homes being purchased has also stabilized, with the typical home purchased now built in 1994, in line with pre-pandemic trends.

AI’s Pervasive Influence in Real Estate: A Transformative Shift

AI’s Pervasive Influence in Real Estate: A Transformative Shift

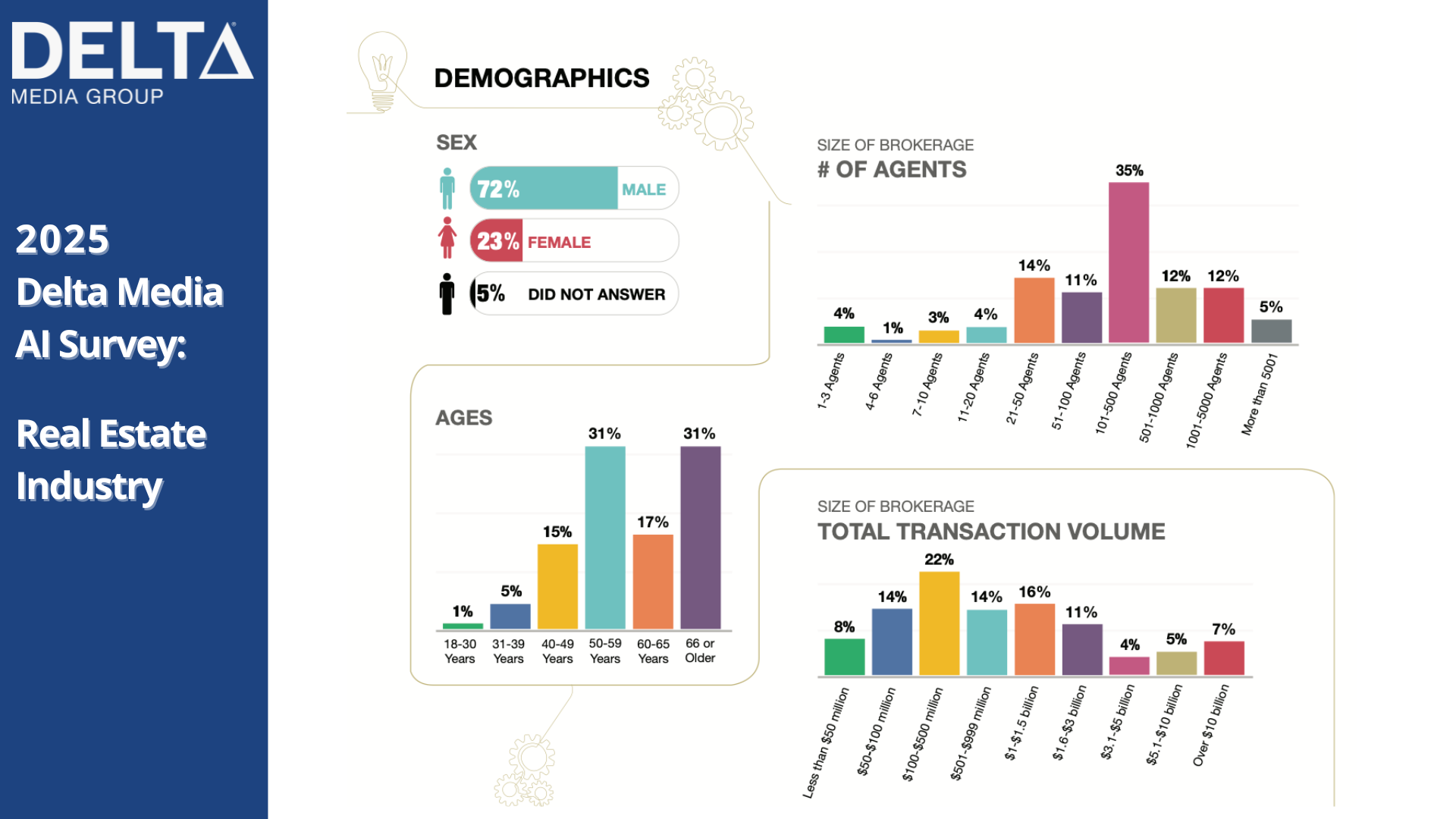

In a revealing report by RISMedia, the 2025 Real Estate Leadership AI Survey conducted by Delta Media Group highlights the sweeping integration of artificial intelligence within the real estate sector. The survey indicates that nearly 90% of brokerage leaders now report their agents’ active use of AI tools, illustrating a 7% increase from the previous year.

Michael Minard, CEO and owner of Delta Media Group, underscores the transformative nature of AI, stating, “AI is no longer a new shiny object; it’s fast become an irreplaceable tool for brokerages and agents alike.” This shift is evident as AI’s role expands beyond marketing and content creation to encompass customer support and administrative automation.

The survey, which gathered insights from over 100 residential brokerage leaders responsible for more than half of all US real estate transactions, reveals a marked change in AI adoption and perception. Leaders rated AI’s current significance at 5.9 out of 10, up from 5.0 in 2024, and expect its future importance to reach 7.2, a 22% increase over the current rating.
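The 22% figure is a relative change: the expected future rating (7.2) measured against the current one (5.9), not a rise of 22 points on the 10-point scale. A small Python check using only the ratings quoted above; `pct_change` is a hypothetical helper introduced for illustration.

```python
def pct_change(old: float, new: float) -> float:
    """Relative change from old to new, expressed as a percentage of old."""
    return (new - old) / old * 100


# Ratings out of 10, as quoted in the survey summary above.
rating_2024 = 5.0
rating_2025 = 5.9
expected_future = 7.2

print(f"2025 vs. 2024: {pct_change(rating_2024, rating_2025):+.1f}%")
print(f"Future vs. 2025: {pct_change(rating_2025, expected_future):+.1f}%")
```

By the same formula, the year-over-year rise from 5.0 to 5.9 is an 18% increase.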

Key Findings

- Broader Demographic Adoption: Age-based disparities in AI usage have vanished, and gender gaps have narrowed, with both male and female leaders reporting high AI engagement.

- Top AI Use Cases: While creating property descriptions remains the primary application, AI is increasingly used for digital marketing, client communications, data analysis, and administrative task automation.

- Easing Risk Concerns: The percentage of leaders highly concerned about AI risks fell from 50.4% in 2024 to 42.2% in 2025.

- Operational Shift: Brokerages are moving from marketing-focused AI applications to broader operational uses, reflecting a strategic shift toward holistic technology integration.
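When a survey compares two percentages, it helps to separate percentage points from relative change. A minimal sketch using the risk-concern figures from the list above (no other data assumed):

```python
# Share of leaders highly concerned about AI risks, from the survey summary above.
concerned_2024 = 50.4  # percent
concerned_2025 = 42.2  # percent

# Difference between two percentages, in percentage points.
point_drop = concerned_2024 - concerned_2025

# The same drop expressed relative to the 2024 share.
relative_drop = point_drop / concerned_2024 * 100

print(f"Drop: {point_drop:.1f} percentage points")
print(f"Relative decline: {relative_drop:.1f}%")
```

Both framings are accurate as long as the unit is stated. The same ambiguity applies to the 7% increase in agents’ AI use reported earlier, which the summary does not specify as points or percent.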

Medium to large brokerages, with their scale and resources, are leading AI adoption, while smaller brokerages face challenges that limit widespread implementation. As we look to the future, Delta anticipates 2025 as a pivotal year for AI in real estate, with expanded usage in automation and customer service poised to redefine operational efficiency.

For a comprehensive understanding, see the full report.

The 20 Fastest Growing Cities in the US: A Closer Look

In a rapidly evolving landscape, the United States is witnessing unprecedented growth in certain metropolitan areas. The latest report from Exploding Topics delves into the top 20 fastest-growing cities and metros across the nation, revealing intriguing trends and factors driving this expansion.

Austin, Texas: The Lone Star Leader

Austin, Texas, has emerged as the fastest-growing metro area in the United States. With a current metro population of 2,473,275 and a growth rate of 25.84%, Austin is becoming a hub of diversity and innovation. The city’s thriving tech scene, bolstered by major players like Apple and Tesla, has attracted a wave of new residents. The University of Texas at Austin also plays a pivotal role in fostering a vibrant, youthful community.

Raleigh, North Carolina: Tech and Talent

Raleigh’s growth is driven by its strong educational institutions and burgeoning tech industry. The Research Triangle is home to prominent universities and tech companies, drawing talent from across the globe. With a metro growth rate of 19.84%, Raleigh is a city on the rise, offering a blend of cultural and professional opportunities.

Orlando, Florida: Beyond the Theme Parks

While Orlando is famous for its theme parks, its growth story extends beyond tourism. The metro area has seen a 19.69% increase in population, driven by a robust healthcare sector and a thriving retirement industry. According to a recent study, Florida’s appeal to retirees continues to fuel its expansion.

Charleston, South Carolina: A Historic Gem

Charleston blends history with modern growth, experiencing an 18.5% increase in its metro population. Known for its charm and coastal beauty, the city attracts both tourists and new residents. Charleston’s economy is diverse, with a mix of tourism, manufacturing, and technology sectors contributing to its growth.

Houston, Texas: A Giant on the Move

Houston, one of the largest cities in the US, has seen its metro area grow by 17.36%. The city’s diverse economy, including a strong energy sector, continues to draw people from across the country. Despite challenges like hurricanes and heat, Houston remains an attractive destination for families and professionals alike.

Sarasota-Bradenton, Florida: Coastal Growth

The Sarasota-Bradenton area has seen a 16.81% increase in its metro population. Known for its beautiful beaches and cultural amenities, this area is a magnet for retirees and tourists. The local economy is bolstered by a strong tourism industry and a growing healthcare sector.

San Antonio, Texas: Military and More

San Antonio’s growth is anchored by its military presence and a diverse economy. With a 16.59% increase in its metro population, the city offers affordable living and a rich cultural heritage. Tourism, healthcare, and manufacturing are key sectors driving San Antonio’s expansion.

Dallas-Fort Worth, Texas: The Metroplex

The Dallas-Fort Worth Metroplex continues to thrive, with a 16.58% growth rate. This sprawling area is a hub for business, culture, and education. Major corporations and a vibrant arts scene make DFW an attractive destination for newcomers.

Phoenix, Arizona: The Valley of the Sun

Phoenix’s warm climate and growing economy have contributed to a 15.61% increase in its metro population. The city is a magnet for retirees and young professionals alike, offering a range of opportunities in healthcare, finance, and technology.

Nashville, Tennessee: Music City

Nashville’s reputation as a music and cultural hub is complemented by its economic growth. With a 15.35% increase in its metro population, the city attracts talent from various industries, including healthcare, education, and entertainment.

These cities represent a dynamic shift in the US urban landscape, driven by factors such as climate, economic opportunities, and cultural attractions. As the nation continues to evolve, these metros stand out as beacons of growth and innovation.

Fenton Township Rezoning Approval Paves Way for New Developments

In an impactful decision, the Fenton Township Board has approved a rezoning measure that could significantly reshape the local landscape. The decision, reached by a 5-2 vote, allows Miller Industries to move forward with its development plans on a 60-acre site. The rezoning converts land previously designated for agricultural, commercial, and residential uses into a commercial and industrial park. The move is expected to bring new hotels, restaurants, and other businesses to the area, promising a substantial economic boost.

Economic Growth and Job Creation

The development plans include the construction of a 270,000-square-foot industrial building and a 30,000-square-foot office space. Miller Industries, a family-owned air supply system manufacturer, anticipates that this project will create an additional 250 jobs, bringing their total employment to 550. Co-founder Matt Miller expressed pride in the company’s investment, highlighting the positive impact on the community.

“We’re going to add another 250 jobs,” Miller stated. “I like to think that we’re doing something good for the community instead of taking something away.”

Concerns Over Property Values

Despite the potential economic benefits, the rezoning decision has not been without controversy. During a public hearing, residents expressed concerns about the impact on local property values. Fenton Township resident Jason Lonsbury requested a property value impact study, citing worries about how the development might affect his smaller parcel of land.

“While we are not opposed to increased development in the area, we would like it to occur in a way that benefits existing homeowners,” Lonsbury remarked, emphasizing the need for careful consideration of long-standing residents’ interests.

Next Steps and Approvals

The township planning commission is set to review a preliminary site plan for the new warehouse and office building. If approved, Miller Industries will proceed to the engineering phase, which includes seeking approval from the Genesee County Road Commission and obtaining necessary permits for water and sewer.

For those interested in following the developments, township meeting agendas and the preliminary site plan are available online at fentontownship.org.

Vietnamese Real Estate Market: Transforming Amidst Global Trends

Vietnamese Real Estate Market: A Transformative Era

The Vietnamese real estate market is currently at a crossroads, aligning itself with global economic trends and presenting both challenges and opportunities. As property prices rise and demand surges, particularly from international investors, industry experts express optimism about the sector’s future trajectory.

Tran Minh Tu, a renowned real estate analyst, underscores Vietnam’s burgeoning potential: “Vietnam’s real estate market is on the verge of significant growth as foreign investments pour in.” This sentiment, widely echoed across the industry, is largely driven by Vietnam’s increasingly favorable economic environment as it recovers from the pandemic’s impacts.

Urban centers such as Ho Chi Minh City and Hanoi are experiencing notable shifts in market dynamics, propelled by persistent demand, especially in the residential sector. Le Thi Hoa, the Deputy Minister of Construction, highlights, “Despite the challenges, the demand for residential properties remains strong, especially among first-time homebuyers.” This observation mirrors the experiences of many locals who are drawn to new housing developments and urban expansion.

The fluctuations in real estate prices have been remarkable over the past few months. January 2025 witnessed a significant increase in property prices, particularly in anticipation of the Vietnamese Lunar New Year. Many predict this trend will continue as potential buyers rush to invest before the festivities.

Government initiatives supporting homeownership for first-time buyers have played a crucial role. Incentives such as lower interest rates and streamlined mortgage processes have invigorated purchasing activity. Improvements in the nation’s economic stability and infrastructure have further buoyed investor confidence, marking this as an opportune moment for investment.

However, the market is not without its challenges. Rising interest rates may deter some buyers by making mortgages less affordable. Additionally, strict regulations concerning housing development and environmental considerations have resulted in delays and increased project costs. These factors could temper investor enthusiasm, even amid rising prices.

The Vietnamese real estate sector stands poised for both growth and challenges, navigating the complex interplay of local and global economic influences. Government policies will undoubtedly shape these dynamics, striving to balance growth with sustainable practices.

The first quarter of 2025 is anticipated to be particularly insightful for the market, with significant developments and policy updates expected post-Lunar New Year. Investors remain optimistic for profitable returns, particularly as the international economic landscape stabilizes.

In summary, observed trends suggest continued upward pressure on property prices, driven by a persistent demand-supply gap, especially for affordable housing. The ongoing influx of foreign investment, alongside robust local demand, is set to dictate the market’s direction.

With an optimal mix of policies, investment, and demand dynamics, the Vietnamese real estate market could not only recover but thrive, potentially achieving unprecedented growth rates and making history.

For further details, refer to the original article on Evrim Ağacı.

AI Revolutionizes the Real Estate Market

The real estate industry, traditionally known for its conservative approach, is undergoing a seismic shift, thanks to the transformative power of artificial intelligence (AI). As reported in a recent Forbes article, AI is not just a buzzword; it’s a catalyst reshaping the landscape of property transactions, management, and investment strategies.

Market Growth and AI Adoption

AI’s impact on real estate is evident in the market’s rapid growth. In 2022, the AI real estate market was valued at approximately $163 billion; by 2023, it had risen to around $226 billion, an annual growth rate of over 37%. This growth reflects the industry’s increasing reliance on AI technologies to drive efficiency and innovation.
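A quick arithmetic check of the figures above, using only the two quoted values. Over a single year, the compound annual growth rate equals the simple year-over-year change.

```python
# Approximate AI-in-real-estate market size in USD billions, as quoted above.
market_2022 = 163
market_2023 = 226

# Year-over-year growth: (new - old) / old.
growth = (market_2023 - market_2022) / market_2022

print(f"Year-over-year growth: {growth:.1%}")
```

The result, about 38.7%, is consistent with the “over 37%” growth rate cited.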

Efficiency and Innovation in Property Transactions

The integration of AI into real estate processes has brought about unprecedented efficiency. Agents and brokers, once reliant on manual processes and personal networks, are now leveraging AI to automate lead generation and refine property valuations. AI algorithms analyze user behavior and demographic data, identifying potential clients with a high propensity for property transactions.

Transformative Trends in Real Estate

AI is not just enhancing existing processes; it’s introducing entirely new paradigms in market forecasting and risk assessment. Predictive AI tools are enabling real estate professionals to uncover patterns and trends that might elude human analysts, thereby informing more strategic investment decisions. Additionally, AI-powered models are revolutionizing property valuation by considering a wider range of data, from market trends to economic factors.

AI’s Growing Influence

A new 2024 survey by Delta Media Group reveals that 75% of leading brokerages in the U.S. have already adopted AI technologies. This widespread adoption underscores AI’s growing influence and the industry’s recognition of its potential to drive competitive advantage.

Future Outlook: AI as a Necessity

As AI continues to integrate into the real estate sector, its adoption is increasingly seen as essential for staying competitive. The shift towards digital and personalized real estate experiences is driven by tech-savvy consumers, particularly Millennials and Gen-Z, who expect more from their property transactions.

Despite being in its early stages, the integration of AI in real estate holds immense potential for growth and innovation. With 45% of venture-backed companies still in early development phases, the sector is ripe for exploration and advancement.

Conclusion

In conclusion, as AI continues to revolutionize the real estate industry, early adopters are reaping the benefits of increased efficiency, accurate valuations, and enhanced customer service. The future of real estate is undeniably intertwined with AI, making it imperative for industry players to embrace and adapt to this technological evolution.

Real Estate Market: A Decade of Transformation

The past few years have witnessed a dramatic escalation in housing prices, largely driven by the pandemic and historically low interest rates. As we look toward the next decade, the burning questions remain: will this upward trend persist, and how will emerging technologies and demographic shifts influence the market?

According to Norada Real Estate Investments, the real estate landscape is poised for significant evolution, characterized by several key trends. Let’s delve into the future of the housing market and explore what lies ahead.

The Emergence of Hybrid Homes

The concept of the “hybrid home” is set to redefine residential living. Beyond merely incorporating a home office, these homes will feature flexible spaces that cater to work, play, and relaxation. Expect an increased emphasis on well-being, with natural light, indoor-outdoor flow, and smart home features becoming essential components.

Tech-Powered Real Estate

Technology will continue to revolutionize real estate. Virtual and augmented reality will transform property tours into immersive experiences, while AI-driven insights will offer personalized recommendations and market forecasts. Blockchain technology is also expected to streamline transactions and enhance security.

Urban Landscapes Reimagined

Cities will undergo a transformation with a focus on mixed-use developments, fostering vibrant, walkable communities. The “15-minute city” concept will gain traction, promoting sustainability and convenience by ensuring essential services are within a short distance.

Climate Considerations

Environmental concerns will take center stage in real estate. Sustainable construction practices, water conservation, and resilient home designs will become standard as the industry adapts to climate change.

The Affordability Challenge

Affordability remains a pressing issue. Government interventions and innovative housing models like co-living and modular homes may provide relief. A shift in mindset, prioritizing starter homes and inclusivity, will be crucial.

Forecasting Home Prices by 2030

A study by RenoFi predicts the average price of a single-family home in the U.S. could reach $382,000 by 2030. However, this varies by location, with cities like San Francisco potentially seeing values exceed $2 million. The study suggests a continued rise in prices, driven by supply and demand dynamics.

Preparing for the Future

Aspiring homeowners are advised to start saving early and consider investing to combat inflation. Long-term financial planning will be key to navigating the evolving market and achieving the dream of homeownership.

As we look ahead, the real estate market promises to be a dynamic arena, shaped by technological advancements, demographic shifts, and environmental considerations. For more insights, explore related predictions from Norada Real Estate Investments, including housing market predictions for the next four years.

The PropTech Revolution: Transforming Real Estate with Innovation

The PropTech Revolution

The challenges faced by the PropTech industry are vast, ranging from data security and regulatory compliance to the implementation of sustainable practices. Yet, technologies such as Artificial Intelligence (AI), Virtual Reality (VR), Internet of Things (IoT), and Blockchain are pivotal in addressing these hurdles. These advancements not only enhance customer experiences but also reduce operational costs and promote sustainable practices.

Startups are at the forefront of this technological wave. Consider Mirage Virtual Reality, which simplifies consumer interactions with 3D virtual property tours. Meanwhile, BlueUrbn is making strides in energy efficiency by reducing carbon emissions and cutting maintenance costs. The integration of cloud computing and big data analytics is further digitalizing property management, leading to significant cost reductions.

Why This Report Matters

- Understand the top 10 technologies that are transforming PropTech companies.

- Explore three practical use cases for each technology.

- Discover 10 groundbreaking startups that are driving these technologies forward.

The report underscores the necessity for stakeholders in real estate to embrace these emerging technologies. By doing so, they can optimize resource allocation, improve service quality, and meet evolving customer expectations. The insights provided illustrate how these technologies enhance efficiency, economize resources, and elevate customer engagement.

Technological Integration in Real Estate

Using AI and machine learning, real estate firms can conduct market analyses and property valuations and better understand tenant preferences. Blockchain technology helps secure property transactions, while augmented reality (AR) and VR enable virtual property tours, making properties easier to visualize.
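
Much of blockchain's security claim rests on hash chaining. Each record stores a cryptographic hash of the previous one, so altering an earlier entry breaks the link that follows it. The toy ledger below illustrates only that property. It has no consensus, digital signatures, or distribution, and the title-transfer fields are hypothetical.

```python
import hashlib
import json


def record_hash(record: dict) -> str:
    """SHA-256 over a canonical (sorted-key) JSON encoding of the record."""
    encoded = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_transfer(chain: list[dict], parcel_id: str, seller: str, buyer: str) -> None:
    """Append a hypothetical title-transfer record linked to its predecessor."""
    prev = record_hash(chain[-1]) if chain else "0" * 64
    chain.append({"parcel_id": parcel_id, "seller": seller,
                  "buyer": buyer, "prev_hash": prev})


def is_valid(chain: list[dict]) -> bool:
    """Check that every record's prev_hash matches its predecessor's hash."""
    return all(chain[i]["prev_hash"] == record_hash(chain[i - 1])
               for i in range(1, len(chain)))


ledger: list[dict] = []
append_transfer(ledger, "PARCEL-001", "Alice", "Bob")
append_transfer(ledger, "PARCEL-001", "Bob", "Carol")
print(is_valid(ledger))          # True
ledger[0]["buyer"] = "Mallory"   # tamper with an earlier record
print(is_valid(ledger))          # False
```

This check alone does not catch a change to the newest record. Real blockchains protect the latest state through consensus among many independent nodes.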

Conclusion

As we look to the future, the PropTech industry is poised for further transformation, driven by technological innovation. By staying informed and integrating these advancements, stakeholders can ensure they remain competitive and sustainable in this rapidly evolving sector.

Emerging Neighbourhoods: The UK’s Next Property Hotspots

As property prices in traditional hotspots continue to ascend, a new trend is emerging among savvy investors and homebuyers across the UK. These individuals are setting their sights on emerging neighbourhoods, which offer a unique blend of affordability, growth potential, and improved amenities. This shift is reshaping the landscape of the British property market, uncovering hidden gems that promise significant returns.

Local estate agents in Shropshire have observed a remarkable surge in interest from buyers eager to find value beyond the usual market hotspots. The county’s market towns, such as Bridgnorth and Ludlow, are gaining traction due to their historic architecture and strong community spirit. These areas offer a perfect blend of rural charm and urban convenience, attracting both local buyers and those relocating from pricier regions.

The rise of hybrid working is fundamentally altering what makes a neighbourhood desirable. Areas once deemed too distant from major employment hubs are now attracting professionals who only need to commute occasionally. This shift is creating new property hotspots in previously overlooked locations that offer larger homes and better value for money.

Cultural regeneration is playing a crucial role in transforming formerly industrial areas into vibrant communities. Cities like Hull and Middlesbrough are witnessing significant investment in arts venues, independent businesses, and public spaces. These cultural improvements are often early indicators of broader neighbourhood regeneration and property price growth.

Transport infrastructure developments continue to be a reliable predictor of future property hotspots. Areas benefiting from new or improved rail links, particularly those reducing journey times to major employment centres, are seeing increased buyer interest well ahead of project completion. This pattern of infrastructure-led growth offers opportunities for early investors to benefit from future price appreciation.

The growth of university cities is creating new property hotspots in unexpected locations. As universities expand and attract more international students, surrounding neighbourhoods are seeing increased demand for both student accommodation and professional housing. This trend is particularly evident in cities like Exeter and Lincoln, where university investment is driving broader urban regeneration.

Coastal towns are experiencing a renaissance as more people seek a better work-life balance. Previously overlooked seaside locations are being rediscovered, particularly those offering good digital connectivity and transport links. Towns like Margate and Hastings are attracting creative communities and remote workers, leading to property price growth and cultural rejuvenation.

Green spaces and environmental factors are increasingly influencing property choices. Neighbourhoods with good access to parks, nature reserves, and cycling infrastructure are seeing growing demand. This trend is particularly noticeable in urban areas where green spaces are at a premium, making neighbourhoods with superior environmental amenities increasingly desirable.

The rise of creative quarters in former industrial areas is creating new property hotspots. Areas with character buildings and potential for conversion are attracting artists, entrepreneurs, and creative businesses, often leading to wider neighbourhood regeneration. This pattern of creative-led regeneration has been particularly successful in cities like Manchester and Newcastle.

Investment in education is another key driver of emerging property hotspots. Areas with improving schools are seeing increased demand from families, often leading to property price growth that outpaces the wider market. This trend is particularly evident in suburban areas where school quality can vary significantly between neighbourhoods.

Town centre regeneration projects are creating opportunities in previously overlooked locations. As local authorities invest in improving public spaces, transport links, and amenities, surrounding residential areas often see increased demand and property price growth. These improvements can transform the character and desirability of entire neighbourhoods.

Looking ahead, identifying future property hotspots requires careful analysis of various factors, from infrastructure investments to demographic trends. The most successful emerging neighbourhoods often combine multiple positive factors, such as improving transport links, cultural amenities, and environmental quality. For buyers and investors, the key is to identify areas showing early signs of positive change before prices fully reflect their improving prospects.
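
One simple way to "combine multiple positive factors" is a weighted score over normalized indicators. The factor names, weights, and 0-to-1 scores below are hypothetical illustrations, not a methodology from the PropertyWire article.

```python
# Hypothetical weights for factors discussed above; they sum to 1.0.
FACTOR_WEIGHTS = {
    "transport_links": 0.30,
    "cultural_investment": 0.20,
    "green_space": 0.20,
    "school_improvement": 0.20,
    "town_centre_regeneration": 0.10,
}


def hotspot_score(indicators: dict[str, float]) -> float:
    """Weighted sum of indicators, each pre-scaled to the range 0..1.

    Missing factors count as 0, so areas with incomplete data are not
    ranked highly by accident.
    """
    for name, value in indicators.items():
        if name not in FACTOR_WEIGHTS:
            raise KeyError(f"unknown factor: {name}")
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return sum(w * indicators.get(name, 0.0) for name, w in FACTOR_WEIGHTS.items())


# Made-up indicator values for two unnamed areas, for illustration only.
areas = {
    "Area A": {"transport_links": 0.9, "cultural_investment": 0.6, "green_space": 0.5,
               "school_improvement": 0.7, "town_centre_regeneration": 0.4},
    "Area B": {"transport_links": 0.3, "green_space": 0.9},
}
for name, values in sorted(areas.items(), key=lambda kv: hotspot_score(kv[1]), reverse=True):
    print(f"{name}: {hotspot_score(values):.2f}")
```

The weights encode a judgment about which signals matter most. In practice, they would be tested against how past areas actually performed.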

For more insights, the original article from PropertyWire offers a comprehensive overview of this evolving trend.

2025 Commercial Real Estate Outlook: Navigating a New Era

The commercial real estate sector is poised to emerge from recent tumultuous years, armed with insights and strategies to better position itself for the future. According to a detailed analysis by Deloitte, the 2025 outlook offers a roadmap for industry leaders to navigate the evolving landscape.

In a year marked by significant economic shifts, the United States Economic Forecast for Q2 2024, along with insights from the Eurozone and India, has set the stage for a transformative period in real estate. The Bank of England’s rate cut and the Federal Reserve’s potential adjustments have further influenced this dynamic sector.

Key Trends and Insights

The report highlights several key trends that are shaping the commercial real estate landscape:

- Reshoring and Nearshoring: The shift towards reshoring in North America and the nearshoring boom in Mexico are driving industrial real estate growth.

- Technology and Sustainability: The demand for data centers is surging, fueled by AI advancements, while sustainability efforts are increasingly critical.

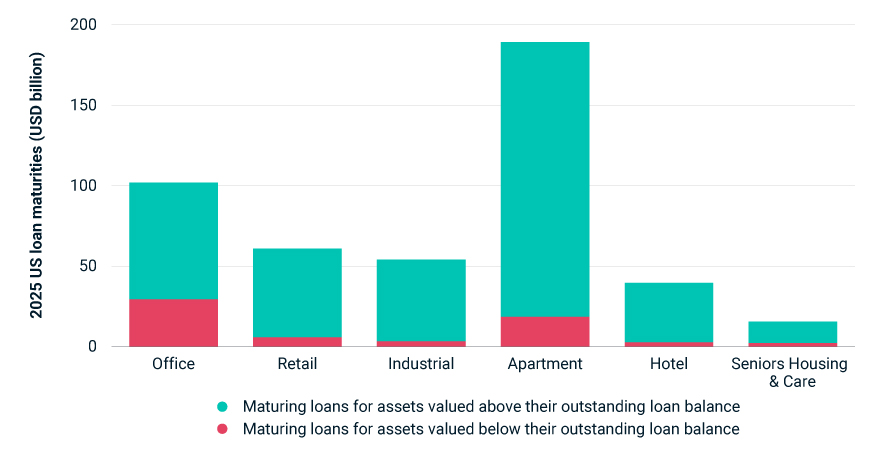

- Loan Maturities: A wave of property-loan maturities, as discussed in the MSCI Real Capital Analytics report, presents both challenges and opportunities for the sector.

As the industry grapples with these changes, the office sector remains a focal point, with evolving work patterns demanding innovative solutions.

Strategic Positioning for the Future

Preparing for future talent needs is paramount as the workforce undergoes a generational shift. Emphasizing skill-based hiring and leveraging AI-ready data are crucial steps for organizations to stay competitive.

In conclusion, the 2025 commercial real estate outlook by Deloitte provides a comprehensive guide for industry leaders to not only weather the current challenges but to also seize emerging opportunities. As the sector stands at a crossroads, embracing these insights will be key to thriving in the new era of real estate.

Navigating the New IRS 1099-K Reporting Rules: What Freelancers and Small Business Owners Need to Know

The IRS is lowering the threshold at which payment apps and online marketplaces must issue Form 1099-K. This adjustment is intended to streamline tax monitoring and compliance, and it affects millions of users in the gig and sharing economies. It’s crucial to understand that income must be reported to the IRS regardless of whether you receive a 1099-K. Many platforms are already notifying users of these changes and are beginning to differentiate between business and personal transactions. Some states, like Maryland and Massachusetts, are implementing even stricter reporting thresholds.

If you find yourself affected by this shift, it is essential to report your earnings accurately. In the event of discrepancies, such as receiving a 1099-K for non-business transactions, corrections can be requested. Keeping meticulous records and consulting professionals can help navigate this evolving tax landscape effectively.

For more detailed information, including insights from tax experts like Mark Steber from Jackson Hewitt, and resources on managing new tax obligations, refer to the full article on CNBC’s website and other linked resources.

Understanding the 1099-K Form

The Form 1099-K is a critical document for reporting income received through payment apps, online marketplaces, or gift cards. As the IRS starts implementing new reporting requirements, understanding this form becomes increasingly crucial for those using platforms like Venmo or PayPal.

Who Will Receive a 1099-K?

Starting in 2024, if you earn more than $5,000 through third-party payment apps, you will receive a 1099-K form. This new threshold means far more people with side hustles, home businesses, and other gigs will be receiving these forms. According to a 2023 Government Accountability Office report, fully implementing the $600 threshold in 2027 will result in an additional 30 million Forms 1099-K issued annually.

What to Do If You Receive a 1099-K

For the 2024 tax year, if you receive a 1099-K for business income, report it on Schedule C (Form 1040), Profit or Loss From Business. It’s advisable to open a separate business account on the relevant app to keep business and personal funds distinct, regardless of IRS requirements.

If you receive a 1099-K incorrectly, you can ask the provider for an amended form with an explanation of what is wrong. You can also enter the correct amount on Schedule 1 of your Form 1040. If a payment listed on a 1099-K doesn’t reflect a true taxable gain, gather receipts and other documentation to support your case.
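
Keeping business and personal payments separate, as advised above, makes it straightforward to check a 1099-K against your own records. The sketch below is a hypothetical record-keeping helper. Its names are illustrative, and it implements no IRS rule beyond a single threshold comparison. The $5,000 default reflects the 2024 threshold described above. Thresholds differ by year and by state, so the threshold is treated as a parameter.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Payment:
    amount: Decimal
    is_business: bool   # True for payments for goods or services you sold
    note: str = ""


def business_total(payments: list[Payment]) -> Decimal:
    """Sum only the payments tagged as business income."""
    return sum((p.amount for p in payments if p.is_business), Decimal("0"))


def expect_form(payments: list[Payment], threshold: Decimal = Decimal("5000")) -> bool:
    """True if business receipts exceed the assumed 1099-K threshold."""
    return business_total(payments) > threshold


def reconcile(form_amount: Decimal, payments: list[Payment]) -> Decimal:
    """Return the form amount minus your recorded business total.

    A positive result suggests the form may include personal payments
    (split bills, reimbursements) that you may need to document or ask
    the platform to correct.
    """
    return form_amount - business_total(payments)


payments = [
    Payment(Decimal("3200.00"), True, "freelance design"),
    Payment(Decimal("2500.00"), True, "craft sales"),
    Payment(Decimal("450.00"), False, "roommate's share of rent"),
]
print(expect_form(payments))                     # True
print(reconcile(Decimal("6150.00"), payments))   # 450.00
```

`Decimal` is used instead of `float` so that currency sums stay exact to the cent.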

Consulting Professionals

Navigating these changes can be complex, especially for gig workers, self-employed individuals, or small business owners. Consulting a tax professional or using reliable tax-prep software can help you claim deductions, such as travel expenses or home office costs, that offset your tax liability.

For more insights, visit the IRS Tax Reporting page and explore resources provided by platforms like PayPal, Venmo, and Cash App.

Forecasting the Future: Housing Market Insights for 2025 to 2028

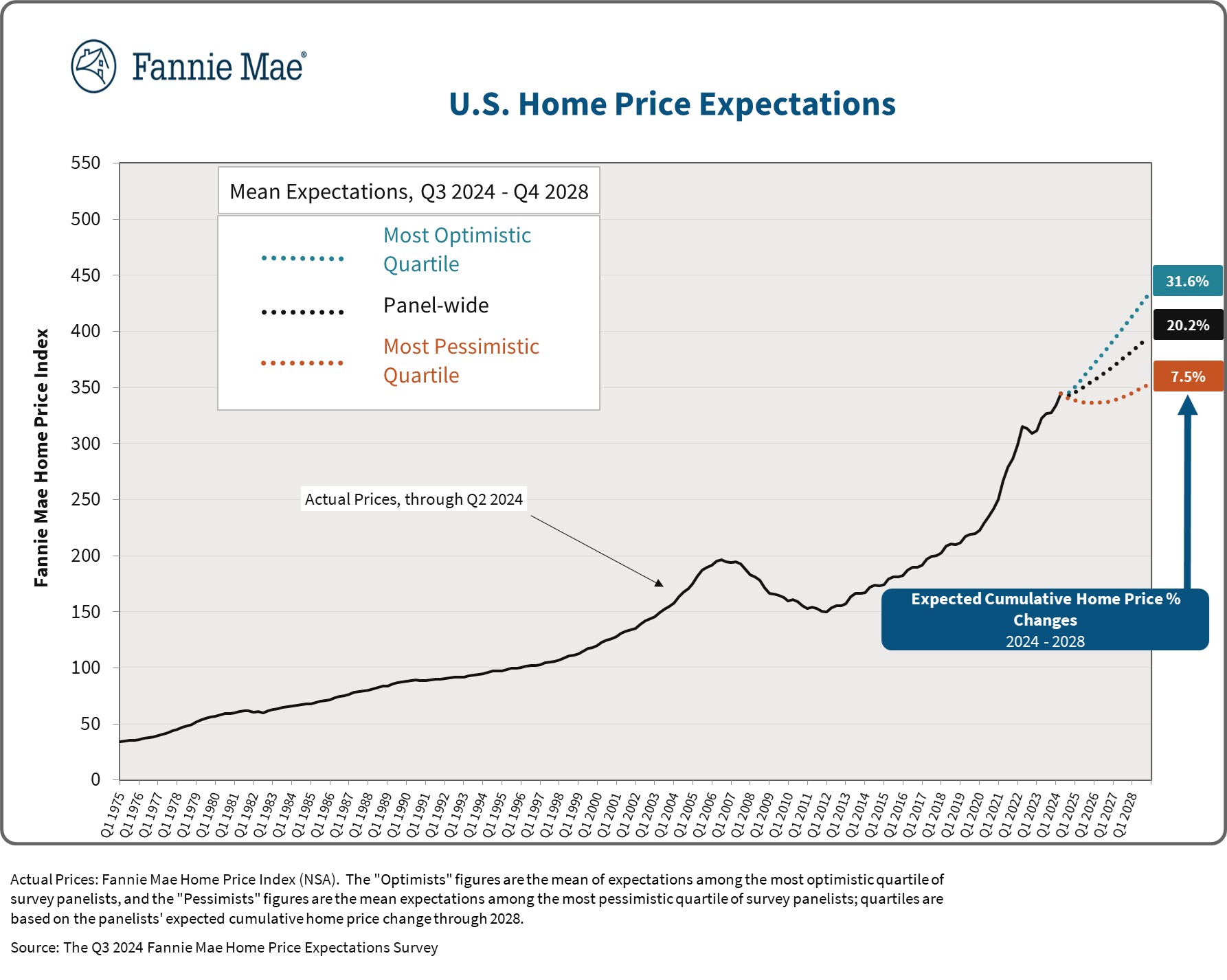

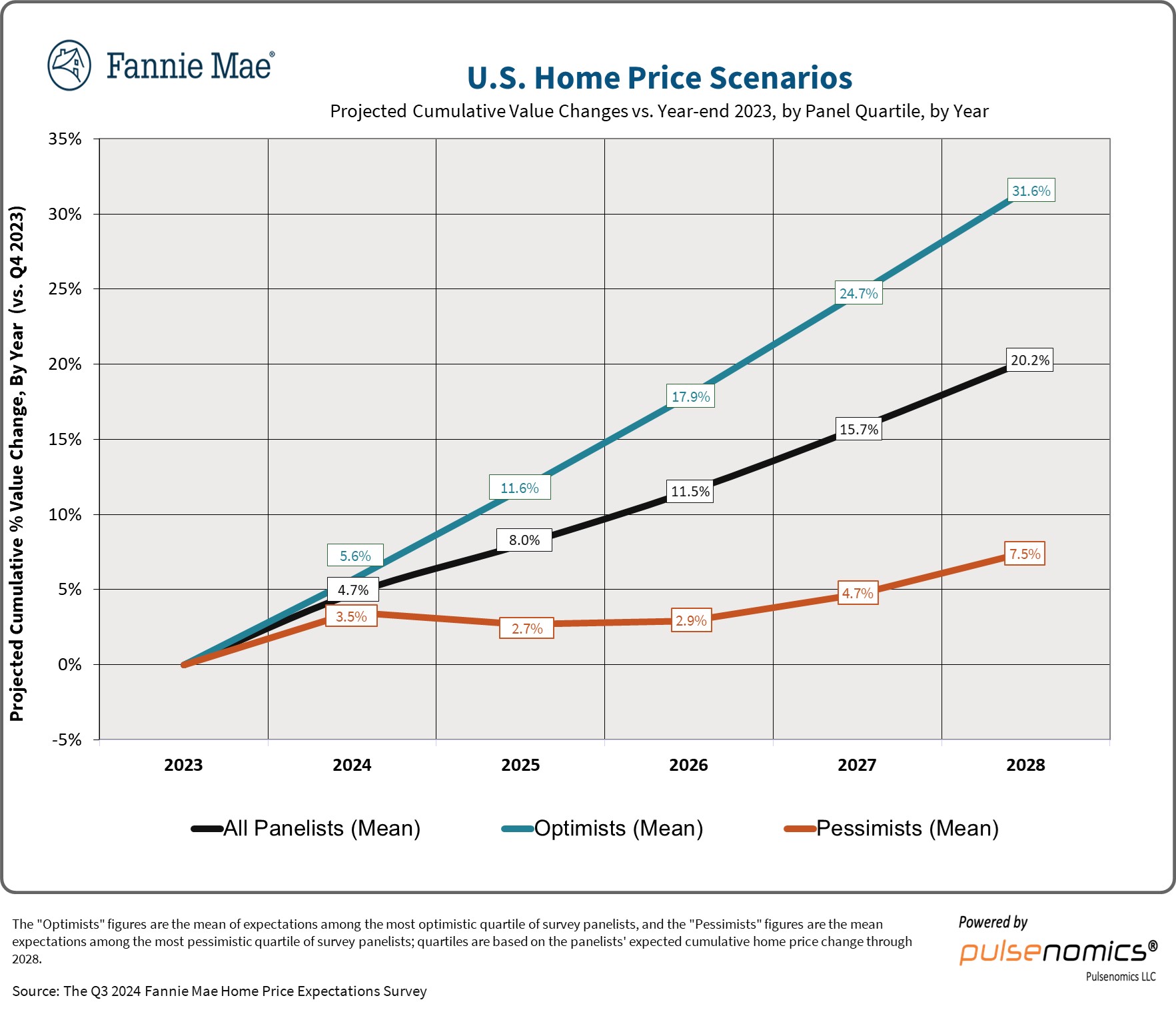

As we look to the horizon of the U.S. housing market, Fannie Mae’s Home Price Expectations Survey offers a crucial glimpse into the coming years. Compiled from the insights of over 100 housing experts, this survey predicts notable changes in home prices from 2025 to 2028. The analysis, originally detailed by Norada Real Estate Investments, suggests a shift in market dynamics that could impact homeowners and investors alike.

Slowing Growth in Home Prices

The survey anticipates a slower pace of home price growth in the coming years compared to the robust 6% increase seen in 2023. For 2024, experts forecast 4.7% growth, slowing further to 3.1% in 2025. This trend reflects a potential cooling of the market, influenced by policy changes and ongoing supply constraints.

Diverging Predictions and Market Uncertainty

The panel’s projections reveal a wide range of outcomes, from optimistic to pessimistic scenarios. By the end of 2028, the most optimistic forecasts suggest a 31.6% cumulative gain in home prices, while the most pessimistic predict only a 7.5% increase. This divergence highlights the uncertainty and complexity of the market’s future.
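
Annual forecasts and cumulative gains are linked by compounding, not simple addition. The sketch below converts in both directions. It assumes the cumulative figures cover the five years 2024 through 2028. The survey's exact base period is not stated here, so the annualized numbers are illustrative only.

```python
import math


def cumulative_gain(annual_rates: list[float]) -> float:
    """Compound a sequence of annual growth rates into one cumulative gain."""
    return math.prod(1.0 + r for r in annual_rates) - 1.0


def annualized_rate(total_gain: float, years: int) -> float:
    """Convert a cumulative gain over `years` into an equivalent annual rate."""
    return (1.0 + total_gain) ** (1.0 / years) - 1.0


# 2024 and 2025 forecasts quoted in the survey summary above.
print(f"2024-2025 combined: {cumulative_gain([0.047, 0.031]):.1%}")

# Assumption: the cumulative figures span five years (2024 through 2028).
for label, gain in [("most optimistic", 0.316), ("most pessimistic", 0.075)]:
    print(f"{label}: {annualized_rate(gain, 5):.1%} per year")
```

Under the five-year assumption, the two extremes correspond to roughly 5.6% and 1.5% a year. The 2024 and 2025 forecasts compound to about 7.9% over two years.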

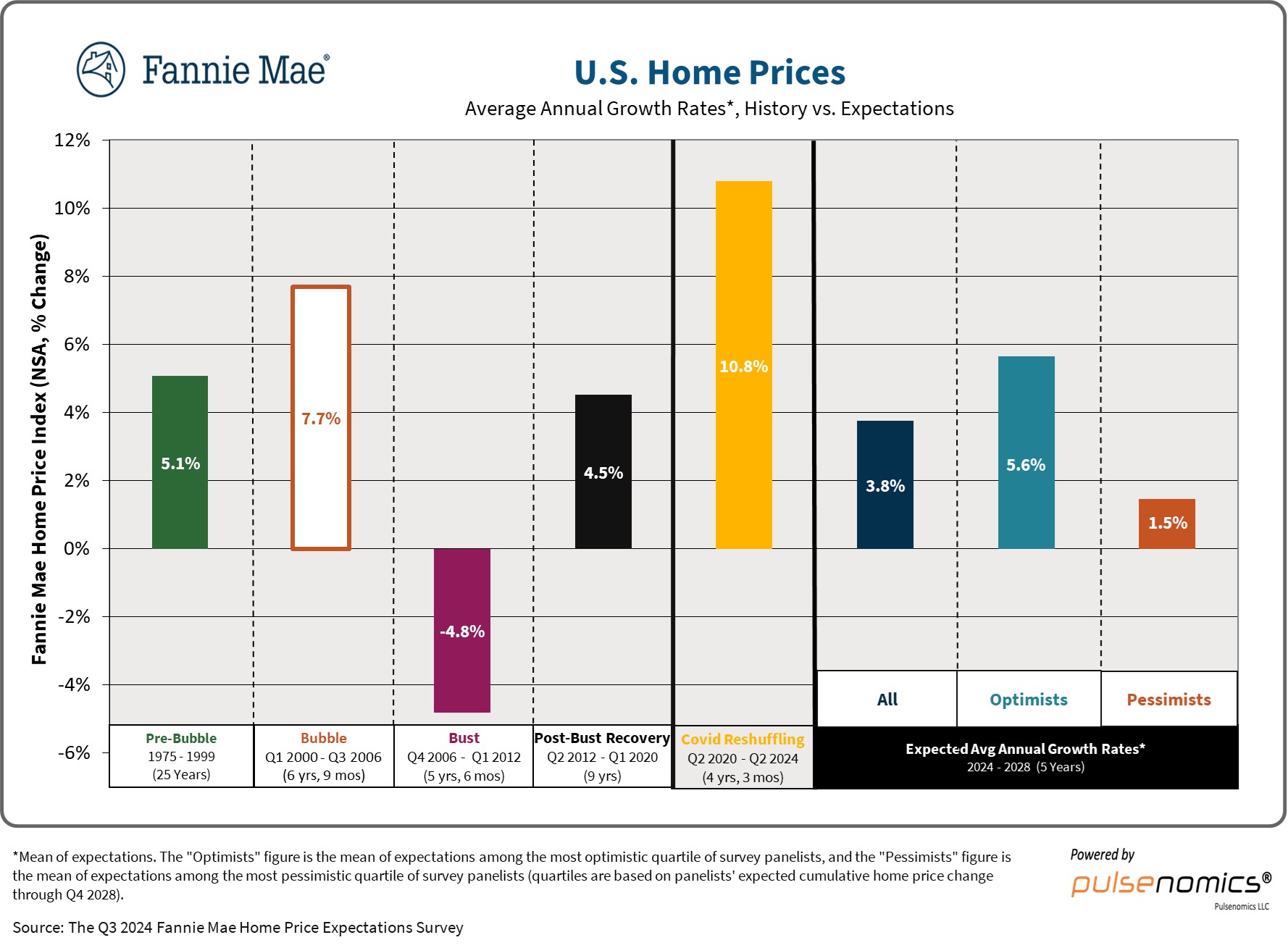

Historical Context and Future Implications

Examining historical data from key periods such as the “Pre-Bubble”, “Bubble”, “Bust”, and “Covid Reshuffling” phases, the survey provides a comprehensive view of market fluctuations. These insights are invaluable for buyers, sellers, and investors, each facing unique challenges and opportunities in light of the projected trends.

- For Buyers: Expect a more moderate pace of price appreciation and continued affordability challenges due to the housing shortage.

- For Sellers: Anticipate slower home price appreciation and a potentially more balanced market.

- For Investors: Returns might moderate, but rental demand is likely to remain strong.

The Role of Policy and Supply Constraints

The persistent shortage of housing remains a major issue, with an estimated deficit of approximately 2.8 million homes. Potential policy reforms, such as zoning and permitting changes, could positively impact housing supply, but there is skepticism about their widespread adoption and effectiveness.

The Path Ahead

While home prices are expected to continue their upward trend, albeit at a reduced pace, the future of the housing market remains intricately linked to external factors such as policy reform and economic conditions. For a deeper understanding, readers are encouraged to explore the original article and related reports provided by Norada Real Estate Investments.

The AI Revolution in Real Estate: A New Era of Market and Property Insights

The real estate industry, long perceived as conservative and slow to adapt, is now on the cusp of a technological revolution. At the forefront of this transformation is the integration of artificial intelligence (AI), particularly in the realms of market prediction and property valuation.

In a recent Forbes article, Andrei Kasyanau, co-founder and CEO of Glorium Technologies, discusses the burgeoning role of AI in real estate. While generative AI has already made significant inroads in real estate marketing, enhancing customer journeys and content creation, it is predictive AI that is poised to reshape the industry. This technology leverages historical data and complex algorithms to anticipate market trends and accurately forecast property values.

How Predictive AI Works

Predictive analytics in real estate is built on a foundation of vast data sets and sophisticated algorithms. By analyzing historical sales data, demographic information, and economic indicators, AI systems can identify patterns and make forecasts. Companies like Compass and Zillow are already harnessing these tools to gain a competitive edge. For instance, Compass has developed a machine learning-driven recommendation system, “Likely to Sell,” which aids agents in identifying potential sellers before their homes are listed.

During the Compass Q2 2024 earnings conference call, CEO Robert Reffkin highlighted the company’s AI model, which currently describes 7% of the market, as a tool for market forecasting and further extrapolation.

Predicting Market Trends

AI’s ability to predict market trends with remarkable accuracy is one of its most powerful applications. A striking example is how AI models predicted the post-pandemic suburban boom. Zillow, utilizing data from the U.S. Census Bureau, anticipated that remote work would drive urban renters to purchase homes in suburban areas. This prediction proved prescient, as evidenced by a surge in suburban home purchases following the pandemic.

Beyond large-scale shifts, AI can forecast price fluctuations and market cycles, analyzing factors such as interest rates and employment data. This level of insight is invaluable for investors, developers, and homebuyers.

Enhancing Property Valuation Accuracy

AI also plays a crucial role in property valuation. By combining human insights with data analysis and predictive modeling, AI-driven valuation models estimate property values with unprecedented accuracy. These models analyze comparable sales, property characteristics, and market trends, uncovering data patterns beyond human perception.
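
The comparable-sales idea can be sketched without any machine learning. Find the recent sales most similar to the subject property and apply their price per square foot. This is the traditional "comps" approach that AI valuation models build on, not Glorium's deep learning model. The features, scaling constants, and sales records below are hypothetical.

```python
import math
from dataclasses import dataclass
from statistics import median


@dataclass
class Property:
    sqft: float
    bedrooms: int
    age_years: float


@dataclass
class Sale:
    home: Property
    price: float


def distance(a: Property, b: Property) -> float:
    """Euclidean distance on roughly scaled features (assumed scales:
    500 sq ft, 1 bedroom and 10 years each count as one unit)."""
    return math.sqrt(
        ((a.sqft - b.sqft) / 500) ** 2
        + (a.bedrooms - b.bedrooms) ** 2
        + ((a.age_years - b.age_years) / 10) ** 2
    )


def estimate_value(subject: Property, sales: list[Sale], k: int = 3) -> float:
    """Median price per sq ft of the k most similar sales, times subject size."""
    if not sales:
        raise ValueError("need at least one comparable sale")
    comps = sorted(sales, key=lambda s: distance(subject, s.home))[:k]
    return median([s.price / s.home.sqft for s in comps]) * subject.sqft


# Hypothetical recent sales near the subject property.
sales = [
    Sale(Property(1500, 3, 20), 300_000),
    Sale(Property(1600, 3, 15), 336_000),
    Sale(Property(1450, 3, 25), 282_750),
    Sale(Property(3000, 5, 2), 900_000),
]
subject = Property(1550, 3, 18)
print(f"Estimated value: ${estimate_value(subject, sales):,.0f}")
```

The large, newer house is left out because it is far from the subject on every feature. AI-driven models extend this idea by learning feature weights and price adjustments from data rather than fixing them by hand.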

A recent project led by Glorium Technologies exemplifies the power of AI in property valuation. They developed a deep learning model capable of predicting property prices using real-time market data, allowing a real estate organization to identify undervalued properties and make informed decisions.

Challenges and Limitations

Despite its benefits, implementing AI in real estate is not without challenges. Data quality and availability can hinder progress, as AI models are only as effective as the data they are trained on. Moreover, there is often a lack of AI expertise within real estate organizations, underscoring the need for dedicated AI specialists.

As AI technology continues to evolve, its impact on real estate will only grow. The industry must embrace these changes to harness the full potential of AI-powered predictive analytics. Those who succeed will be well-positioned to thrive in the rapidly evolving real estate landscape.

Remote Working’s Transformative Impact on India’s Real Estate

Changing Residential Preferences

Remote work has redefined what homebuyers seek in a property. The demand for larger living spaces has surged as individuals prioritize homes that can accommodate both living and working activities. A Knight Frank report highlights that over 70% of homebuyers now consider a dedicated home office crucial. This trend is further supported by the necessity for high-speed internet connectivity, which has become indispensable in this digital age. Moreover, access to green spaces is increasingly important. The pandemic has heightened awareness of the benefits of nature, driving a desire for homes near parks and serene environments.

Suburban and Rural Appeal

The allure of suburban and rural areas is growing, primarily due to affordability and space. Cities like Pune, Nashik, and Coimbatore are becoming popular choices as they offer a balance of cost-effective housing and connectivity to urban amenities. The National Housing Bank reports a 12% rise in Pune’s housing prices, driven by remote workers seeking larger homes at lower costs.

Commercial Real Estate Evolution

The commercial sector is not immune to these shifts. The rise of co-working spaces and flexible office environments reflects the adoption of hybrid work models. As the JLL report suggests, co-working spaces in India are projected to grow by 30% annually. This trend offers scalability and reduced overheads, appealing especially to startups and small businesses. Rental yields in traditional office spaces have seen a decline, as evidenced by an 8% drop in Gurugram. This shift underscores the growing preference for flexible workspace solutions.

Technological Integration

Technology is at the forefront of this transformation. Digital platforms, virtual tours, and data analytics are revolutionizing property transactions and management. These advancements are not only streamlining operations but also enhancing the customer experience.

Future Prospects and Government Support

The future of real estate in India is intertwined with government initiatives like the Digital India Initiative, enhancing internet connectivity, especially in rural areas. Such policies are essential in supporting the remote work revolution. As urban development evolves, mixed-use developments and sustainable growth models are expected to dominate. These changes promise to create vibrant, decentralized communities that offer a high quality of life.

In conclusion, the rise of remote working is not just a trend but a catalyst for reshaping the real estate investment landscape in India. As preferences continue to evolve, staying informed about these dynamics will be crucial for investors and developers aiming for long-term success.

Navigating the Commercial Real Estate Terrain in 2025: Challenges and Renewed Opportunities

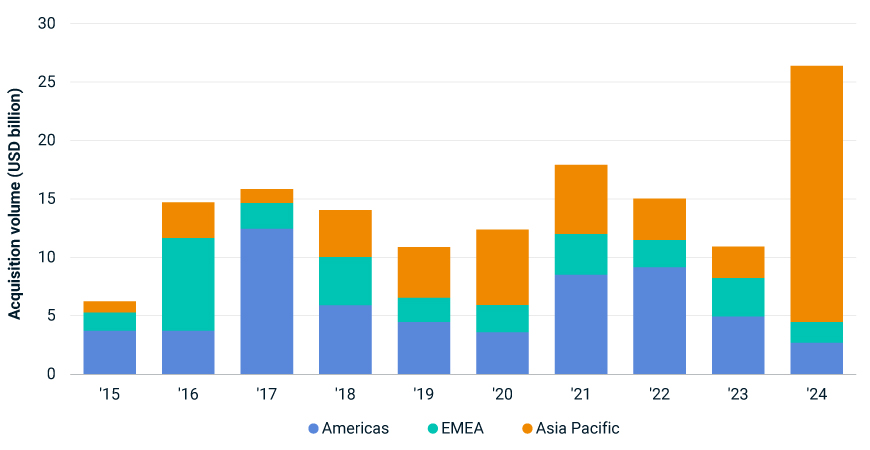

In 2024, interest rates began to decline, leading to a stabilization in transactional activity and the reemergence of asset-value growth in certain segments. However, the recovery is uneven, with different areas of the market moving at varied paces. This presents both opportunities and risks for investors, who must navigate a landscape marked by both cyclical and structural changes.

Recovery – Not Everywhere All at Once

The recovery phase, which began in 2024, is still in its infancy. Lower interest rates are expected to help buyers and sellers align more closely on pricing, improving liquidity. Yet, investor preferences are shifting, with a focus on living sectors, industrial assets, and properties aligned with broader socioeconomic and technological trends. A notable transaction in 2024 was Blackstone Inc.’s $16 billion acquisition of data-center operator AirTrunk, underscoring the growing demand for assets that straddle the line between traditional property and infrastructure.

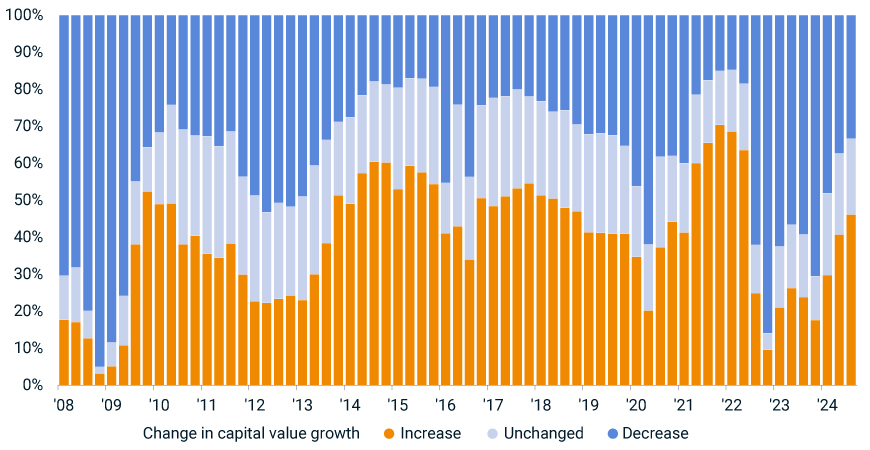

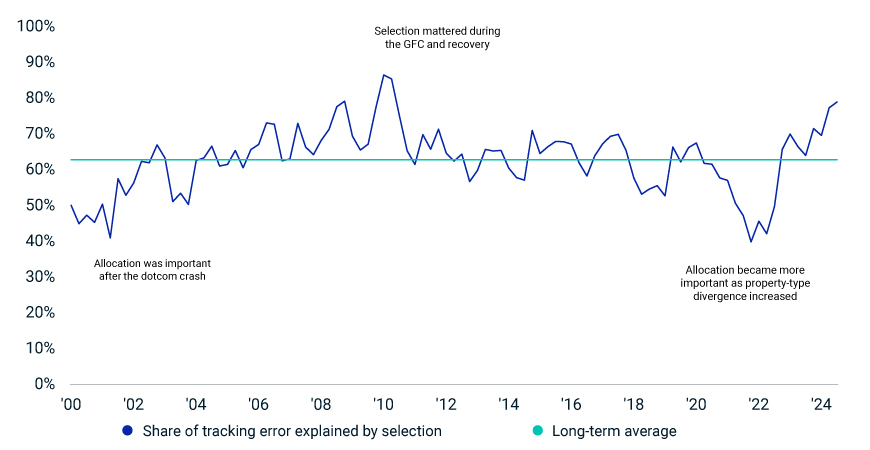

Investment Pendulum Swings Back to Asset Selection

The real estate market is entering a new investment cycle where active asset selection and management are crucial. With market conditions evolving, the traditional playbook for delivering returns is changing. Investors must balance top-down allocation strategies with granular, bottom-up asset-selection decisions. The interplay between these approaches has become more complex, demanding a keen understanding of the drivers of performance.

Underwater Assets Come to Light

Higher interest rates and ongoing price declines have put pressure on borrowers’ ability to refinance commercial-property loans. In the U.S., nearly $500 billion of loans are set to mature in 2025, with about 14% potentially underwater. U.S. offices face particularly bleak refinancing prospects, with nearly 30% of maturing office loans tied to properties worth less than the debt secured against them.
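
A loan is "underwater" when its outstanding balance exceeds the current value of the property, meaning a loan-to-value (LTV) ratio above 100%. If the 14% refers to loan balance, the article's rounded figures imply roughly $70 billion of underwater debt maturing in 2025. The sketch below flags underwater loans. The loan records are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MaturingLoan:
    loan_id: str
    balance: float          # outstanding principal due at maturity
    current_value: float    # latest estimated value of the collateral property


def ltv(loan: MaturingLoan) -> float:
    """Loan-to-value ratio: balance divided by current property value."""
    if loan.current_value <= 0:
        raise ValueError("current_value must be positive")
    return loan.balance / loan.current_value


def underwater_share(loans: list[MaturingLoan]) -> float:
    """Fraction of total maturing balance on loans with LTV above 1.0."""
    total = sum(loan.balance for loan in loans)
    under = sum(loan.balance for loan in loans if ltv(loan) > 1.0)
    return under / total if total else 0.0


# Rough scale from the article: about 14% of about $500 billion.
print(f"Approximate underwater volume: ${0.14 * 500:.0f} billion")

# Hypothetical maturing loans, in US dollars.
loans = [
    MaturingLoan("office-1", 80e6, 60e6),      # LTV about 1.33: underwater
    MaturingLoan("retail-1", 40e6, 55e6),      # LTV about 0.73
    MaturingLoan("industrial-1", 30e6, 50e6),  # LTV 0.60
]
for loan in loans:
    status = "UNDERWATER" if ltv(loan) > 1.0 else "ok"
    print(loan.loan_id, f"LTV {ltv(loan):.2f}", status)
print(f"Underwater share of balance: {underwater_share(loans):.0%}")
```

An underwater borrower cannot refinance the full balance against the property alone. That is why these maturities can force extra equity injections, loan modifications, or sales.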

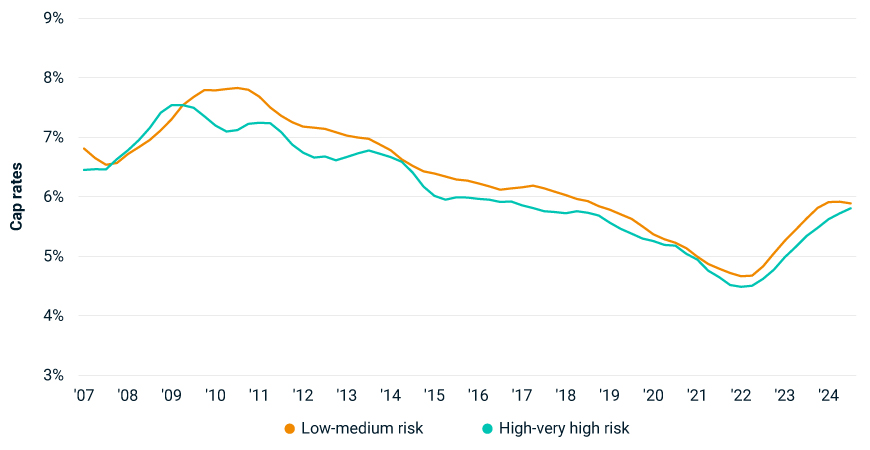

Investors Get to Grips with Physical Climate Risk

Extreme weather events are expected to become more common, affecting real-estate asset values through higher insurance premiums and disruption costs. Despite this, the risk is not yet adequately priced into transaction yields. As climate risks intensify, pricing should adjust to reflect the increased risk to property values.
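
The link between insurance costs and asset values can be shown with the direct-capitalization formula: value = net operating income (NOI) / cap rate. In the hypothetical example below, a higher premium lowers NOI and therefore value. If the risk were also priced into yields, as argued above, a higher cap rate would push value down further. The risk-premium parameter and all numbers are illustrative assumptions.

```python
def cap_value(noi: float, cap_rate: float) -> float:
    """Direct capitalization: property value = NOI / cap rate."""
    if cap_rate <= 0:
        raise ValueError("cap_rate must be positive")
    return noi / cap_rate


def value_after_climate_costs(noi: float, cap_rate: float,
                              extra_insurance: float,
                              risk_premium: float = 0.0) -> float:
    """Value after deducting added insurance cost and adding a yield premium.

    `risk_premium` is an assumed extra yield (0.0025 = 25 basis points)
    reflecting physical climate risk.
    """
    return cap_value(noi - extra_insurance, cap_rate + risk_premium)


base = cap_value(1_000_000, 0.06)
insured = value_after_climate_costs(1_000_000, 0.06, extra_insurance=50_000)
repriced = value_after_climate_costs(1_000_000, 0.06, 50_000, risk_premium=0.0025)
print(f"baseline: ${base:,.0f}")
print(f"higher premiums only: ${insured:,.0f}")
print(f"premiums plus repriced yield: ${repriced:,.0f}")
```

Because value is inversely proportional to the cap rate, even a small repricing of yields can move values as much as the premium increase itself.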

Property Investors Seek a Ride on the AI Train

The rapid development of AI is driving demand for data centers, transforming the investment landscape. Significant capital is being committed to developing new data centers, with notable deals like Blackstone’s acquisition of AirTrunk. This surge in interest is reshaping market dynamics, with traditional property investors now competing in a space once dominated by infrastructure investors.

As we move further into 2025, the commercial real estate market remains a complex and evolving landscape. Investors must remain vigilant and adaptable, leveraging insights and strategies to navigate the challenges and opportunities that lie ahead.

Generative AI: A New Era for Commercial Real Estate

In the rapidly evolving landscape of commercial real estate, Generative AI (GenAI) is emerging as a transformative force. As companies seek to leverage this cutting-edge technology, they must carefully balance the potential risks and rewards to reshape their organizational strategies.

Revolutionizing Real Estate Operations

GenAI is poised to revolutionize the real estate sector by automating and optimizing a myriad of functions. From property operations and acquisition strategies to investor relations and asset management, the potential applications are vast. This technology allows for lightning-speed data analysis, offering unprecedented insights and efficiency gains.

The original article from EY highlights how GenAI is being compared to the digital transformation wave of the early 2000s, which similarly disrupted industries across the board.

Strategic Vision and Ethical Use

Developing a long-term strategic vision for GenAI is crucial. Companies must ensure they use AI in a safe, responsible, and ethical manner. This involves addressing challenges such as workforce impact, cybersecurity, intellectual property, and potential biases in AI systems.

As noted by Umar Riaz, Managing Director of Real Estate, Hospitality, and Construction Consulting at EY, the key to success lies in creating a robust AI adoption approach. This involves selecting use cases, transforming processes, and building a scalable AI governance framework.

Transformative Applications

The article outlines several transformative applications of GenAI in real estate:

- Acquisitions: Automating due diligence and enhancing portfolio planning.

- Investor Relations: Streamlining communications and targeting potential investors.

- Business Support: Revolutionizing HR, IT, and legal functions.

- Asset Management: Improving data analysis for budgeting and forecasting.

- Finance and Accounting: Enhancing financial reporting and fraud detection.

- Property Operations: Optimizing energy management and tenant services.

Implementing GenAI

Real estate companies are encouraged to develop a comprehensive GenAI approach that includes:

- Use case selection and process transformation.

- Technology roadmap and selection.

- Responsible and ethical AI practices.

- Organizational transformation roadmap.

- Talent transformation and upskilling.

The implementation of GenAI requires a strategic alignment of technology and business goals. Companies must consider foundational models, data storage, and hosting options to effectively deploy AI solutions.

Conclusion

As GenAI continues to transform the real estate industry, companies must navigate the complexities of AI adoption. By balancing risks and rewards, businesses can harness the power of GenAI to drive innovation and efficiency.

For more insights, explore EY’s resources on Real Estate, Hospitality & Construction and Transformation Realized.

Almal Real Estate Expands into Commercial and Global Markets

Almal Real Estate, known for its innovative approaches in the real estate sector, is now setting its sights on international markets, including the UAE, Bali, and Thailand. This expansion is part of a broader strategy to diversify its portfolio and tap into the lucrative commercial real estate sector.

The company’s decision to venture into new verticals and international markets is driven by the growing demand for commercial spaces and the increasing globalization of business operations. By expanding its footprint, Almal Real Estate aims to leverage its expertise in residential development to cater to the commercial sector’s evolving needs.

This move is not just about geographical expansion; it represents a strategic pivot towards a more diversified business model. The company is looking to capitalize on the robust growth prospects in the commercial real estate market, which has been buoyed by increasing urbanization and the rise of new business hubs.

For more details on this development, see the original article.

Strategic Growth Initiatives

The expansion is part of Almal Real Estate’s broader strategic growth initiatives, which include enhancing its operational capabilities and strengthening its market presence. By entering new markets, the company aims to establish itself as a key player in the global real estate landscape.

Impact on the Real Estate Sector

Almal Real Estate’s expansion into commercial and international markets is expected to have a ripple effect across the real estate sector. As the company adapts to the demands of these new markets, it is likely to set new benchmarks in terms of quality and innovation.

This strategic move not only underscores Almal Real Estate’s commitment to growth but also reflects its adaptability in a rapidly changing market environment. As the company embarks on this new journey, it is poised to redefine its role in the global real estate industry.

Transformative Trends in Commercial Real Estate for 2025

The commercial real estate sector is poised for significant transformation as we move into 2025. This evolution is driven by a confluence of economic shifts, demographic changes, and technological advancements, creating both challenges and opportunities for stakeholders in the industry.

With recent interest rate adjustments by major central banks such as the European Central Bank (ECB) and the Bank of England, market dynamics are evolving rapidly. The ECB’s recent rate cuts, as reported by Daniel Cunningham, and the Federal Reserve’s stance on potential rate adjustments, highlighted by Jeanna Smialek of The New York Times, are shaping investment strategies and market confidence.

In this context, the intersection of technology and sustainability is becoming crucial. The growth of artificial intelligence and a focus on decarbonization are driving significant demand for data centers, as emphasized by Kimberley Steele from JLL. Environmental considerations are not only shaping infrastructure developments but also influencing the regulatory landscape, with energy performance standards and retrofitting policies gaining prominence.

The strategic importance of nearshoring is underscored by investments in regions such as Mexico, bolstered by shifts in global supply chains. These actions highlight the broader trend towards enhancing operational efficiencies and sustainability practices across real estate portfolios.

The original article also draws on insights from Robyn Gibbard on economic forecasts. As the industry navigates the complex landscape of 2025, redefining strategic priorities is paramount, establishing a pathway not just to resilience but to growth and leadership in the new era of commercial real estate.

Additional Insights

Real Estate Sector Gains Momentum with Budget 2025

In a strategic move poised to reshape India’s commercial real estate landscape, the introduction of a national guidance framework for Global Capability Centres (GCCs) is set to give the sector a significant boost. The framework, highlighted among the real estate measures in Budget 2025, is expected to stimulate growth, particularly in tier-II and tier-III cities.

Sanjeev Dasgupta, Chief Executive Officer of CapitaLand Investment (India), emphasized the framework’s alignment with India’s ambition to become a hub for high-value global operations. By enhancing infrastructure and talent pipelines, this initiative aims to strengthen the country’s ability to host GCCs effectively.

Real estate experts predict substantial opportunities for commercial real estate as a result of this framework. The overarching theme of urban development, prevalent in this year’s budget, underscores a commitment to infrastructure enhancement through increased public-private partnerships (PPP) and comprehensive fiscal reforms.

The budget also introduces tax reforms designed to increase disposable income for the middle class, thereby encouraging investment and consumption. Urban infrastructure initiatives, coupled with support for MSMEs, reflect a broad economic strategy aimed at sustainable urban growth and improved quality of life.

The guidance framework for GCCs, with its focus on boosting commercial real estate, plays a crucial role in the broader efforts to transform India’s economic landscape. This move benefits both investors and the local economy, reinforcing India’s position as a prime destination for global operations.

Key Takeaways

- The national guidance framework targets growth in tier-II and tier-III cities.

- Focus on enhancing infrastructure and talent development in states.

- Budget highlights include increased PPP and infrastructure initiatives.

- Tax reforms aim to bolster disposable income and investment.

Budget 2025: A New Dawn for Middle-Class Homebuyers

The Budget 2025 announcements have brought a wave of optimism for middle-class homebuyers. To enhance disposable income and affordability, the Finance Minister has made income up to ₹12 lakh free of income tax under the new tax regime, rising to ₹12.75 lakh for salaried taxpayers once the ₹75,000 standard deduction is applied. This move is set to boost household spending power, which in turn should drive housing demand and invigorate investment in the real estate sector.

Real estate experts suggest that these measures will have a positive impact on both primary and secondary housing markets. As highlighted in Hindustan Times, the government’s streamlined approach to taxation is expected to strengthen household purchasing power.
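To make the threshold arithmetic concrete, here is a minimal Python sketch of the simplified rule described above. It assumes that no tax is payable when income is at or below ₹12 lakh and that the ₹75,000 standard deduction applies only to salaried taxpayers. The function names are hypothetical, and the model deliberately ignores marginal relief, surcharge, cess, other deductions, and income taxed at special rates.

```python
# Simplified model of the Budget 2025 thresholds described above.
# Amounts are in rupees, written with Indian digit grouping (1 lakh = 1,00,000).
TAX_FREE_LIMIT = 12_00_000     # ₹12 lakh of income on which no tax is payable
STANDARD_DEDUCTION = 75_000    # assumed to apply to salaried taxpayers only


def tax_free_ceiling(salaried: bool) -> int:
    """Highest gross income that stays tax-free under this simplified model."""
    return TAX_FREE_LIMIT + (STANDARD_DEDUCTION if salaried else 0)


def owes_no_tax(gross_income: int, salaried: bool) -> bool:
    """True if gross income is at or below the applicable ceiling."""
    return gross_income <= tax_free_ceiling(salaried)


print(tax_free_ceiling(salaried=True))          # 1275000, i.e. ₹12.75 lakh
print(owes_no_tax(12_50_000, salaried=True))    # True
print(owes_no_tax(12_50_000, salaried=False))   # False
```

A salaried taxpayer earning ₹12.5 lakh stays under the ceiling, while a non-salaried taxpayer with the same income does not; the gap between the two ceilings is exactly the standard deduction.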

SWAMIH Fund 2: A Lifeline for Stalled Projects

A cornerstone of this budget is SWAMIH Fund 2, with an allocation of ₹15,000 crore aimed at completing another 1 lakh housing units. It is a lifeline for thousands of homebuyers affected by stalled projects. The fund builds on the existing SWAMIH scheme, under which 50,000 units have been completed, and reflects the government’s commitment to resolving the housing crisis. More details can be found in the SWAMIH-2 Investment Fund article.

Tax Reforms: A Boon for Landlords and Investors

The Budget also proposes raising the threshold for TDS on rent from ₹2.40 lakh to ₹6 lakh annually. This change is expected to ease the compliance burden on small landlords and taxpayers, as discussed in the TDS limit on rent analysis.

In a significant policy shift, homeowners will be able to claim a Nil annual value for two self-occupied properties instead of just one. This reform is likely to encourage property ownership and investment, making real estate a more attractive option for investors.
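The TDS threshold change can be illustrated with a short Python sketch. It compares annual rent against the old and new annual thresholds quoted above and assumes TDS applies only when rent exceeds the threshold. The names are hypothetical, and the sketch works with annual totals only, so it may not capture exactly how the provision is framed in the legislation (for example, on a monthly basis).

```python
# Annual TDS-on-rent thresholds as quoted above, in rupees.
OLD_THRESHOLD = 2_40_000   # ₹2.40 lakh per year
NEW_THRESHOLD = 6_00_000   # ₹6 lakh per year


def tds_applies(annual_rent: int, threshold: int) -> bool:
    """In this simplified model, TDS is deducted only when rent exceeds the threshold."""
    return annual_rent > threshold


monthly_rent = 40_000
annual_rent = monthly_rent * 12                  # ₹4.8 lakh per year
print(tds_applies(annual_rent, OLD_THRESHOLD))   # True: above ₹2.40 lakh
print(tds_applies(annual_rent, NEW_THRESHOLD))   # False: below ₹6 lakh
```

Rent of ₹40,000 a month crosses the old limit but not the new one, which illustrates the compliance relief the measure targets.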

Urban Development: Transforming Cities into Growth Hubs

The establishment of a ₹1 lakh crore Urban Challenge Fund is another highlight of the budget. The fund aims to enhance urban infrastructure, unlock real estate potential, and transform cities into major growth hubs, and it is expected to significantly shape city planning and governance.

Challenges in Affordable Housing

Despite these promising initiatives, critics say the budget does not adequately address affordable housing. Rising home loan interest rates and outdated definitions of affordable housing continue to pose challenges for prospective homeowners. Experts emphasize the need for a national rental housing policy to strengthen the housing program.

Conclusion: A Progressive Shift in Real Estate

Budget 2025’s focus on investor-friendly policies and on creating a conducive environment for real estate growth is evident. By removing the tax on deemed rent for a second self-occupied property, the government is aligning with the evolving housing needs of Indian families. This progressive shift not only encourages homeownership but also sets the stage for a revitalized real estate sector.

Predictive Analytics: The New Frontier in Commercial Real Estate

As the commercial real estate sector navigates a landscape marked by economic uncertainty and technological change, a new player has emerged to transform the game: predictive analytics. According to a recent report by JLL, AI and generative AI are among the top technologies reshaping the industry. In 2023 alone, $630 million was invested in AI-driven proptech, underscoring its growing importance.

Understanding Predictive Analytics

Predictive analytics in commercial real estate involves extracting insights from large data sets to give a holistic view of market trends and tenant behavior. It enables landlords to anticipate market movements and tenant demand, a capability that was previously out of reach given the sector’s slow adoption of technology.

Real-Time Data in Action

Predictive analytics has many applications, chief among them allowing landlords to forecast market activity and prepare for fluctuations. For instance, data from the Leasing Prediction Outlook indicates positive growth signals in New York City and San Francisco. Real-time data aggregation is crucial because it forms the backbone of predictive insights, helping landlords identify opportunities, manage risks, and maintain a competitive edge.
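Real-time aggregation often starts with something as simple as a sliding-window count over an event stream. The sketch below is a hypothetical Python example, not something described in the source: the `RollingActivity` class counts events such as tour requests within the last seven days, assuming events arrive in timestamp order and that `count` is called with non-decreasing times.

```python
from collections import deque
from datetime import datetime, timedelta


class RollingActivity:
    """Counts events (e.g. tour requests) that fall inside a sliding time window."""

    def __init__(self, window: timedelta) -> None:
        self.window = window
        self._events: deque[datetime] = deque()

    def add(self, ts: datetime) -> None:
        # Assumes events arrive in timestamp order.
        self._events.append(ts)

    def count(self, now: datetime) -> int:
        # Evict events older than the window; assumes `now` never moves backwards.
        cutoff = now - self.window
        while self._events and self._events[0] < cutoff:
            self._events.popleft()
        return len(self._events)


tours = RollingActivity(window=timedelta(days=7))
start = datetime(2025, 1, 1)
for day in (0, 2, 5, 9, 10, 11):
    tours.add(start + timedelta(days=day))
print(tours.count(now=start + timedelta(days=12)))  # 4
```

Comparing the current window count with a longer-run average is one way such a count could feed the kind of growth signals mentioned above.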

Challenges and Considerations

While predictive analytics offers significant advantages, it also requires a robust data infrastructure. Landlords must evaluate their current data sources and systems to ensure they support real-time data collection and analysis. Investing in the right tools and working with data analysts can help integrate these insights effectively, despite the initial learning curve.

AI-powered solutions, though still nascent in real estate, are essential for developing forward-looking strategies. As the industry evolves, these tools will be vital for navigating the challenges posed by hybrid work models and high interest rates.
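One concrete way to start the data-infrastructure evaluation described above is a freshness audit: check when each data source last updated and flag any that lag behind a chosen limit. The following Python sketch assumes hypothetical source names and a 24-hour staleness limit; in practice the timestamps would come from each system’s own metadata.

```python
from datetime import datetime, timedelta


def stale_sources(last_updated: dict[str, datetime], now: datetime,
                  max_age: timedelta) -> list[str]:
    """Return the names of sources whose latest update is older than max_age."""
    return sorted(name for name, ts in last_updated.items() if now - ts > max_age)


now = datetime(2025, 3, 1, 12, 0)
sources = {  # hypothetical systems and their last-update times
    "lease_comps": now - timedelta(hours=2),
    "building_sensors": now - timedelta(minutes=5),
    "tenant_crm": now - timedelta(days=3),
}
print(stale_sources(sources, now, max_age=timedelta(hours=24)))  # ['tenant_crm']
```

Sources that repeatedly appear in this list are the ones to prioritize when investing in tooling, since predictive models can only be as current as their slowest input.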

For further insights, see the original article on Forbes.